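In this article, you learn how to use Voice Live with generative AI and Azure AI Speech in the Azure AI Foundry portal.

You create and run an application that uses Voice Live directly with generative AI models for real-time voice agents.

Using models directly lets you specify custom instructions (prompts) for each session, which gives you more flexibility for dynamic or experimental use cases.

Models can be preferable when you want fine-grained control over session parameters, or when you need to adjust the prompt or configuration frequently without updating an agent in the portal.

The code for model-based sessions is simpler in some respects, because it doesn't need to manage agent IDs or agent-specific settings.

Direct model use suits scenarios that don't need agent-level abstractions or built-in logic.

To use the Voice Live API with agents instead, see the Voice Live API agents quickstart.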

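Prerequisites

- An Azure subscription. Create one for free.

- An Azure AI Foundry resource created in one of the supported regions. For more information about region availability, see the Voice Live overview documentation.

Tip

To use Voice Live, you don't need to deploy an audio model with your Azure AI Foundry resource. Voice Live is fully managed, and the model is deployed for you automatically. For more information about model availability, see the Voice Live overview documentation.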

在语音测试环境中实时试用语音功能

若要试用语音实时演示,请执行以下步骤:

转到 Azure AI Foundry 中的项目。

从左窗格中选择“游乐场地”。

在 “语音场 ”磁贴中,选择“ 试用语音场”。

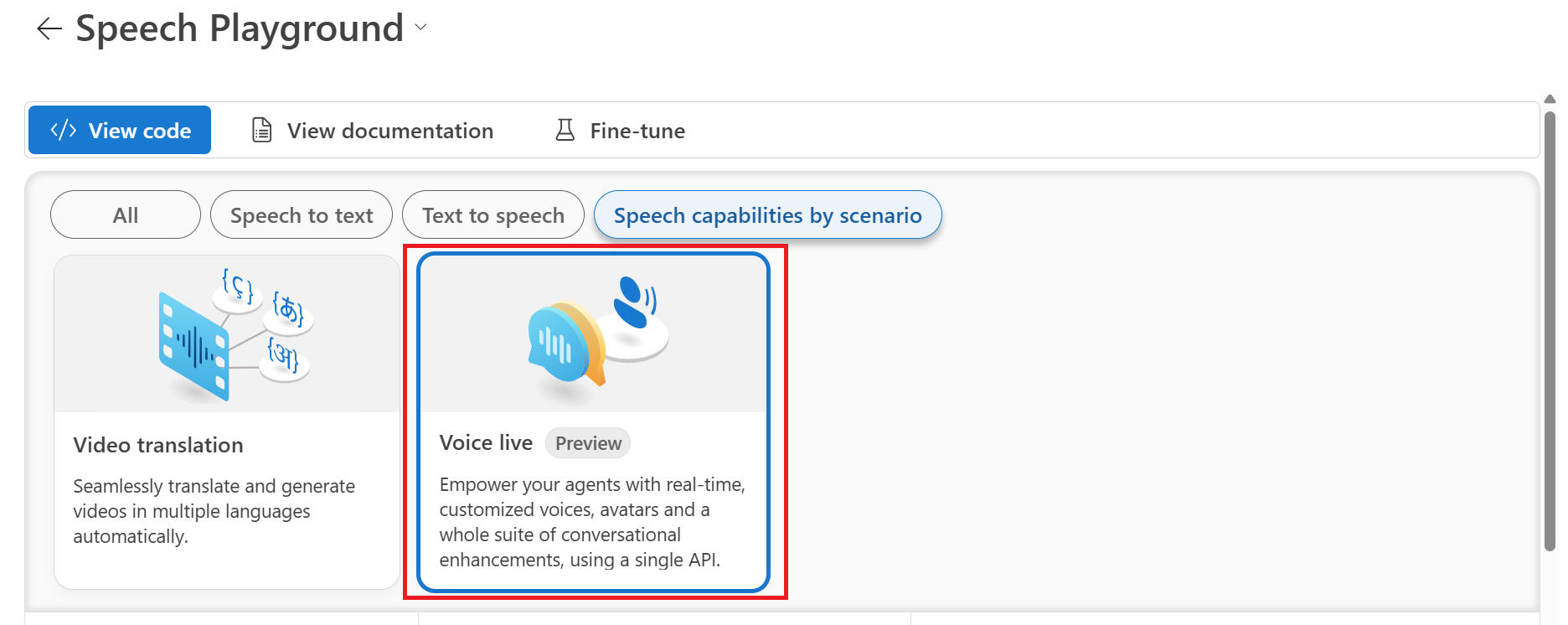

选择按场景选择语音功能>语音直播。

选择一个示例方案,例如 休闲聊天。

选择 “开始” 以开始与聊天代理聊天。

选择 “结束 ”以结束聊天会话。

通过 Configuration>GenAI>生成 AI 模型从下拉列表中选择新的生成 AI 模型。

注释

还可以选择你在 Agents 沙箱中配置的代理。 有关详细信息,请参阅使用 Foundry 代理的语音实时快速入门。

根据需要编辑其他设置,例如 响应说明、 语音和 朗读率。

选择 “开始 ”以再次开始说话,然后选择 “结束 ”以结束聊天会话。

In this article, you learn how to use Azure AI Speech Voice Live with Azure AI Foundry models by using the VoiceLive SDK for Python.

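Prerequisites

- An Azure subscription. Create one for free.

- Python 3.10 or later. If you don't have a suitable version of Python installed, follow the instructions in the VS Code Python Tutorial, which is the easiest way to install Python on your operating system.

- An Azure AI Foundry resource created in one of the supported regions. For more information about region availability, see Region support.

Tip

To use Voice Live, you don't need to deploy an audio model with your Azure AI Foundry resource. Voice Live is fully managed, and the model is deployed for you automatically. For more information about model availability, see the Voice Live overview documentation.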

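Microsoft Entra ID prerequisites

For the recommended keyless authentication with Microsoft Entra ID, you need to:

- Install the Azure CLI, which is used for keyless authentication with Microsoft Entra ID.

- Assign the Cognitive Services User role to your user account. You can assign roles in the Azure portal under Access control (IAM) > Add role assignment.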

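Set up

Create a new folder named voice-live-quickstart and go to the quickstart folder with the following command:

    mkdir voice-live-quickstart && cd voice-live-quickstart

Create a virtual environment. If you already have Python 3.10 or later installed, you can create a virtual environment with the following commands (shown for Windows):

    py -3 -m venv .venv
    .venv\scripts\activate

Activating the Python environment means that when you run python or pip from the command line, you use the Python interpreter contained in the .venv folder of your application. You can use the deactivate command to exit the Python virtual environment, and you can reactivate it later when needed.

Tip

We recommend that you create and activate a new Python environment to install the packages you need for this tutorial. Don't install packages into your global Python installation. Always use a virtual or conda environment when installing Python packages; otherwise, you can break your global installation of Python.

Create a file named requirements.txt. Add the following packages to the file:

    aiohttp==3.11.18
    azure-core==1.35.0
    azure-identity==1.22.0
    certifi==2025.4.26
    cffi==1.17.1
    cryptography==44.0.3
    numpy==2.2.5
    pycparser==2.22
    python-dotenv==1.1.0
    pyaudio
    requests==2.32.3
    sounddevice==0.5.1
    typing_extensions==4.13.2
    urllib3==2.4.0
    websocket-client==1.8.0
    azure-ai-voicelive

Install the packages:

    pip install -r requirements.txt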
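Before installing the packages, it can help to confirm that the interpreter meets the Python 3.10 requirement and that a virtual environment is active. The following is a minimal sketch that uses only the standard library. The helper names meets_minimum and in_virtual_env are hypothetical and aren't part of the quickstart.

```python
import sys

MIN_VERSION = (3, 10)  # minimum Python version stated in the prerequisites


def meets_minimum(version_info=sys.version_info, minimum=MIN_VERSION):
    """Return True if the (major, minor) version is at least `minimum`."""
    return tuple(version_info[:2]) >= tuple(minimum)


def in_virtual_env():
    """Return True when running inside a venv-style virtual environment.

    In a venv, sys.prefix points at the environment while
    sys.base_prefix still points at the base installation.
    """
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print(f"Python {sys.version_info.major}.{sys.version_info.minor}")
    print("Version OK:", meets_minimum())
    print("Virtual environment active:", in_virtual_env())
```

This check only recognizes environments created with venv or virtualenv. A conda environment isn't detected this way, so treat a False result there as inconclusive.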

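Retrieve resource information

Create a new file named .env in the folder where you want to run the code.

In the .env file, add the following environment variables for authentication:

    AZURE_VOICELIVE_ENDPOINT=<your_endpoint>
    AZURE_VOICELIVE_MODEL=<your_model>
    AZURE_VOICELIVE_API_VERSION=2025-10-01
    AZURE_VOICELIVE_API_KEY=<your_api_key> # Only required if using API key authentication

Replace the placeholder values with your actual endpoint, model, API version, and API key.

| Variable name | Value |
|---|---|
| AZURE_VOICELIVE_ENDPOINT | You can find this value in the Keys and Endpoint section when you examine your resource in the Azure portal. |
| AZURE_VOICELIVE_MODEL | The model you want to use. For example, gpt-4o or gpt-4o-mini-realtime-preview. For more information about model availability, see the Voice Live API overview documentation. |
| AZURE_VOICELIVE_API_VERSION | The API version you want to use. For example, 2025-10-01. |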
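A quick way to catch a .env file that still contains placeholders is to parse it and check the values before running the sample. The sketch below is a simplified stand-in for what python-dotenv does and isn't a replacement for it. The helper names parse_env_text and missing_settings are hypothetical, and treating the endpoint and model as required is an assumption made for illustration.

```python
# Assumption: these two settings are treated as required for this check.
REQUIRED = ("AZURE_VOICELIVE_ENDPOINT", "AZURE_VOICELIVE_MODEL")


def parse_env_text(text):
    """Parse simple KEY=VALUE lines; a simplified stand-in for python-dotenv."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop an inline comment such as "value # note".
        value = value.split(" #", 1)[0].strip()
        values[key.strip()] = value
    return values


def missing_settings(values, required=REQUIRED):
    """Return required names that are absent, empty, or still placeholders."""
    missing = []
    for name in required:
        value = values.get(name, "")
        if not value or (value.startswith("<") and value.endswith(">")):
            missing.append(name)
    return missing


if __name__ == "__main__":
    with open(".env", encoding="utf-8") as env_file:
        problems = missing_settings(parse_env_text(env_file.read()))
    if problems:
        print("Update these settings in .env:", ", ".join(problems))
    else:
        print("Required settings look complete.")
```

The API key is left out of the required list because it's only needed for API key authentication.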

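Start a conversation

The sample code in this quickstart uses either Microsoft Entra ID or an API key for authentication. You can set the script argument to be either your API key or an access token.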
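The choice between the two authentication modes can be sketched as a small pure function. This is an illustration only: choose_auth is a hypothetical helper that returns a label instead of creating an Azure credential, and it assumes that token authentication takes precedence when both options are available.

```python
from typing import Optional


def choose_auth(api_key: Optional[str], use_token_credential: bool) -> str:
    """Return which authentication mode a run would use.

    "token"   -> Microsoft Entra ID (keyless) authentication
    "api_key" -> API key authentication
    Raises ValueError when neither option is available.
    """
    if use_token_credential:
        return "token"
    if api_key:
        return "api_key"
    raise ValueError(
        "No authentication provided: pass an API key or enable token credentials."
    )


if __name__ == "__main__":
    print(choose_auth(api_key=None, use_token_credential=True))
    print(choose_auth(api_key="example-key", use_token_credential=False))
```

Keeping the decision separate from credential creation makes it easy to test without signing in to Azure.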

Create the voice-live-quickstart.py file with the following code:

    import os
    import sys
    import asyncio
    import base64
    import argparse
    import signal
    import threading
    import queue
    import logging
    from datetime import datetime
    from typing import Union, Optional, TYPE_CHECKING, cast
    from concurrent.futures import ThreadPoolExecutor

    # Audio processing imports
    try:
        import pyaudio
    except ImportError:
        print("This sample requires pyaudio. Install with: pip install pyaudio")
        sys.exit(1)

    # Change to the directory where this script is located
    os.chdir(os.path.dirname(os.path.abspath(__file__)))

    # Environment variable loading
    try:
        from dotenv import load_dotenv

        # The working directory is now the script folder, so a relative path works
        load_dotenv(".env", override=True)
    except ImportError:
        print("Note: python-dotenv not installed. Using existing environment variables.")

    # Azure VoiceLive SDK imports
    from azure.core.credentials import AzureKeyCredential, TokenCredential
    from azure.identity import DefaultAzureCredential, InteractiveBrowserCredential
    from azure.ai.voicelive.aio import connect

    if TYPE_CHECKING:
        # Only needed for type checking; avoids runtime import issues
        from azure.ai.voicelive.aio import VoiceLiveConnection

    from azure.ai.voicelive.models import (
        RequestSession,
        ServerEventType,
        ServerVad,
        AzureStandardVoice,
        Modality,
        InputAudioFormat,
        OutputAudioFormat,
    )

    # Set up logging: one timestamped log file per run in the logs folder
    os.makedirs("logs", exist_ok=True)
    timestamp = datetime.now().strftime("%Y-%m-%d_%H-%M-%S")
    logging.basicConfig(
        filename=f"logs/{timestamp}_voicelive.log",
        filemode="w",
        format="%(asctime)s:%(name)s:%(levelname)s:%(message)s",
        level=logging.INFO,
    )
    logger = logging.getLogger(__name__)


    class AudioProcessor:
        """
        Handles real-time audio capture and playback for the voice assistant.

        Threading Architecture:
        - Main thread: Event loop and UI
        - Capture thread: PyAudio input stream reading
        - Send thread: Async audio data transmission to VoiceLive
        - Playback thread: PyAudio output stream writing
        """

        def __init__(self, connection):
            self.connection = connection
            self.audio = pyaudio.PyAudio()

            # Audio configuration - PCM16, 24kHz, mono as specified
            self.format = pyaudio.paInt16
            self.channels = 1
            self.rate = 24000
            self.chunk_size = 1024

            # Capture and playback state
            self.is_capturing = False
            self.is_playing = False
            self.input_stream = None
            self.output_stream = None

            # Audio queues and threading
            self.audio_queue: "queue.Queue[bytes]" = queue.Queue()
            self.audio_send_queue: "queue.Queue[str]" = queue.Queue()  # base64 audio to send
            self.executor = ThreadPoolExecutor(max_workers=3)
            self.capture_thread: Optional[threading.Thread] = None
            self.playback_thread: Optional[threading.Thread] = None
            self.send_thread: Optional[threading.Thread] = None
            self.loop: Optional[asyncio.AbstractEventLoop] = None  # Store the event loop

            logger.info("AudioProcessor initialized with 24kHz PCM16 mono audio")

        async def start_capture(self):
            """Start capturing audio from microphone."""
            if self.is_capturing:
                return

            # Store the running event loop for use in threads
            self.loop = asyncio.get_running_loop()
            self.is_capturing = True

            try:
                self.input_stream = self.audio.open(
                    format=self.format,
                    channels=self.channels,
                    rate=self.rate,
                    input=True,
                    frames_per_buffer=self.chunk_size,
                    stream_callback=None,
                )
                self.input_stream.start_stream()

                # Start capture thread
                self.capture_thread = threading.Thread(target=self._capture_audio_thread)
                self.capture_thread.daemon = True
                self.capture_thread.start()

                # Start audio send thread
                self.send_thread = threading.Thread(target=self._send_audio_thread)
                self.send_thread.daemon = True
                self.send_thread.start()

                logger.info("Started audio capture")
            except Exception as e:
                logger.error(f"Failed to start audio capture: {e}")
                self.is_capturing = False
                raise

        def _capture_audio_thread(self):
            """Audio capture thread - runs in background."""
            while self.is_capturing and self.input_stream:
                try:
                    # Read audio data
                    audio_data = self.input_stream.read(self.chunk_size, exception_on_overflow=False)
                    if audio_data and self.is_capturing:
                        # Convert to base64 and queue for sending
                        audio_base64 = base64.b64encode(audio_data).decode("utf-8")
                        self.audio_send_queue.put(audio_base64)
                except Exception as e:
                    if self.is_capturing:
                        logger.error(f"Error in audio capture: {e}")
                    break

        def _send_audio_thread(self):
            """Audio send thread - handles async operations from sync thread."""
            while self.is_capturing:
                try:
                    # Get audio data from queue (blocking with timeout)
                    audio_base64 = self.audio_send_queue.get(timeout=0.1)
                    if audio_base64 and self.is_capturing and self.loop:
                        # Schedule the async send operation in the main event loop.
                        # Don't wait for completion to avoid blocking.
                        asyncio.run_coroutine_threadsafe(
                            self.connection.input_audio_buffer.append(audio=audio_base64),
                            self.loop,
                        )
                except queue.Empty:
                    continue
                except Exception as e:
                    if self.is_capturing:
                        logger.error(f"Error sending audio: {e}")
                    break

        async def stop_capture(self):
            """Stop capturing audio."""
            if not self.is_capturing:
                return

            self.is_capturing = False

            if self.input_stream:
                self.input_stream.stop_stream()
                self.input_stream.close()
                self.input_stream = None

            if self.capture_thread:
                self.capture_thread.join(timeout=1.0)
            if self.send_thread:
                self.send_thread.join(timeout=1.0)

            # Clear the send queue
            while not self.audio_send_queue.empty():
                try:
                    self.audio_send_queue.get_nowait()
                except queue.Empty:
                    break

            logger.info("Stopped audio capture")

        async def start_playback(self):
            """Initialize audio playback system."""
            if self.is_playing:
                return

            self.is_playing = True

            try:
                self.output_stream = self.audio.open(
                    format=self.format,
                    channels=self.channels,
                    rate=self.rate,
                    output=True,
                    frames_per_buffer=self.chunk_size,
                )

                # Start playback thread
                self.playback_thread = threading.Thread(target=self._playback_audio_thread)
                self.playback_thread.daemon = True
                self.playback_thread.start()

                logger.info("Audio playback system ready")
            except Exception as e:
                logger.error(f"Failed to initialize audio playback: {e}")
                self.is_playing = False
                raise

        def _playback_audio_thread(self):
            """Audio playback thread - runs in background."""
            while self.is_playing:
                try:
                    # Get audio data from queue (blocking with timeout)
                    audio_data = self.audio_queue.get(timeout=0.1)
                    if audio_data and self.output_stream and self.is_playing:
                        self.output_stream.write(audio_data)
                except queue.Empty:
                    continue
                except Exception as e:
                    if self.is_playing:
                        logger.error(f"Error in audio playback: {e}")
                    break

        async def queue_audio(self, audio_data: bytes):
            """Queue audio data for playback."""
            if self.is_playing:
                self.audio_queue.put(audio_data)

        async def stop_playback(self):
            """Stop audio playback and clear queue."""
            if not self.is_playing:
                return

            self.is_playing = False

            # Clear the queue
            while not self.audio_queue.empty():
                try:
                    self.audio_queue.get_nowait()
                except queue.Empty:
                    break

            if self.output_stream:
                self.output_stream.stop_stream()
                self.output_stream.close()
                self.output_stream = None

            if self.playback_thread:
                self.playback_thread.join(timeout=1.0)

            logger.info("Stopped audio playback")

        async def cleanup(self):
            """Clean up audio resources."""
            await self.stop_capture()
            await self.stop_playback()

            if self.audio:
                self.audio.terminate()

            self.executor.shutdown(wait=True)
            logger.info("Audio processor cleaned up")


    class BasicVoiceAssistant:
        """Basic voice assistant implementing the VoiceLive SDK patterns."""

        def __init__(
            self,
            endpoint: str,
            credential: Union[AzureKeyCredential, TokenCredential],
            model: str,
            voice: str,
            instructions: str,
        ):
            self.endpoint = endpoint
            self.credential = credential
            self.model = model
            self.voice = voice
            self.instructions = instructions
            self.connection: Optional["VoiceLiveConnection"] = None
            self.audio_processor: Optional[AudioProcessor] = None
            self.session_ready = False
            self.conversation_started = False

        async def start(self):
            """Start the voice assistant session."""
            try:
                logger.info(f"Connecting to VoiceLive API with model {self.model}")

                # Connect to VoiceLive WebSocket API
                async with connect(
                    endpoint=self.endpoint,
                    credential=self.credential,
                    model=self.model,
                ) as connection:
                    conn = connection
                    self.connection = conn

                    # Initialize audio processor
                    ap = AudioProcessor(conn)
                    self.audio_processor = ap

                    # Configure session for voice conversation
                    await self._setup_session()

                    # Start audio systems
                    await ap.start_playback()

                    logger.info("Voice assistant ready! Start speaking...")
                    print("\n" + "=" * 60)
                    print("🎤 VOICE ASSISTANT READY")
                    print("Start speaking to begin conversation")
                    print("Press Ctrl+C to exit")
                    print("=" * 60 + "\n")

                    # Process events
                    await self._process_events()

            except KeyboardInterrupt:
                logger.info("Received interrupt signal, shutting down...")

            except Exception as e:
                logger.error(f"Connection error: {e}")
                raise

            finally:
                # Clean up audio resources, including when an error is raised
                if self.audio_processor:
                    await self.audio_processor.cleanup()

        async def _setup_session(self):
            """Configure the VoiceLive session for audio conversation."""
            logger.info("Setting up voice conversation session...")

            # Create strongly typed voice configuration
            voice_config: Union[AzureStandardVoice, str]
            if "-" in self.voice:
                # Azure voice names contain a hyphen (for example, en-US-AvaNeural)
                voice_config = AzureStandardVoice(name=self.voice, type="azure-standard")
            else:
                # OpenAI voice (alloy, echo, fable, onyx, nova, shimmer)
                voice_config = self.voice

            # Create strongly typed turn detection configuration
            turn_detection_config = ServerVad(threshold=0.5, prefix_padding_ms=300, silence_duration_ms=500)

            # Create strongly typed session configuration
            session_config = RequestSession(
                modalities=[Modality.TEXT, Modality.AUDIO],
                instructions=self.instructions,
                voice=voice_config,
                input_audio_format=InputAudioFormat.PCM16,
                output_audio_format=OutputAudioFormat.PCM16,
                turn_detection=turn_detection_config,
            )

            conn = self.connection
            assert conn is not None, "Connection must be established before setting up session"
            await conn.session.update(session=session_config)

            logger.info("Session configuration sent")

        async def _process_events(self):
            """Process events from the VoiceLive connection."""
            try:
                conn = self.connection
                assert conn is not None, "Connection must be established before processing events"
                async for event in conn:
                    await self._handle_event(event)

            except KeyboardInterrupt:
                logger.info("Event processing interrupted")
            except Exception as e:
                logger.error(f"Error processing events: {e}")
                raise

        async def _handle_event(self, event):
            """Handle different types of events from VoiceLive."""
            logger.debug(f"Received event: {event.type}")
            ap = self.audio_processor
            conn = self.connection
            assert ap is not None, "AudioProcessor must be initialized"
            assert conn is not None, "Connection must be established"

            if event.type == ServerEventType.SESSION_UPDATED:
                logger.info(f"Session ready: {event.session.id}")
                self.session_ready = True

                # Start audio capture once session is ready
                await ap.start_capture()

            elif event.type == ServerEventType.INPUT_AUDIO_BUFFER_SPEECH_STARTED:
                logger.info("🎤 User started speaking - stopping playback")
                print("🎤 Listening...")

                # Stop current assistant audio playback (interruption handling)
                await ap.stop_playback()

                # Cancel any ongoing response
                try:
                    await conn.response.cancel()
                except Exception as e:
                    logger.debug(f"No response to cancel: {e}")

            elif event.type == ServerEventType.INPUT_AUDIO_BUFFER_SPEECH_STOPPED:
                logger.info("🎤 User stopped speaking")
                print("🤔 Processing...")

                # Restart playback system for response
                await ap.start_playback()

            elif event.type == ServerEventType.RESPONSE_CREATED:
                logger.info("🤖 Assistant response created")

            elif event.type == ServerEventType.RESPONSE_AUDIO_DELTA:
                # Stream audio response to speakers
                logger.debug("Received audio delta")
                await ap.queue_audio(event.delta)

            elif event.type == ServerEventType.RESPONSE_AUDIO_DONE:
                logger.info("🤖 Assistant finished speaking")
                print("🎤 Ready for next input...")

            elif event.type == ServerEventType.RESPONSE_DONE:
                logger.info("✅ Response complete")

            elif event.type == ServerEventType.ERROR:
                logger.error(f"❌ VoiceLive error: {event.error.message}")
                print(f"Error: {event.error.message}")

            elif event.type == ServerEventType.CONVERSATION_ITEM_CREATED:
                logger.debug(f"Conversation item created: {event.item.id}")

            else:
                logger.debug(f"Unhandled event type: {event.type}")


    def parse_arguments():
        """Parse command line arguments."""
        parser = argparse.ArgumentParser(
            description="Basic Voice Assistant using Azure VoiceLive SDK",
            formatter_class=argparse.ArgumentDefaultsHelpFormatter,
        )

        parser.add_argument(
            "--api-key",
            help="Azure VoiceLive API key. If not provided, will use AZURE_VOICELIVE_API_KEY environment variable.",
            type=str,
            default=os.environ.get("AZURE_VOICELIVE_API_KEY"),
        )

        parser.add_argument(
            "--endpoint",
            help="Azure VoiceLive endpoint",
            type=str,
            default=os.environ.get("AZURE_VOICELIVE_ENDPOINT", "wss://api.voicelive.com/v1"),
        )

        parser.add_argument(
            "--model",
            help="VoiceLive model to use",
            type=str,
            default=os.environ.get("AZURE_VOICELIVE_MODEL", "gpt-realtime"),
        )

        parser.add_argument(
            "--voice",
            help="Voice to use for the assistant. E.g. alloy, echo, fable, en-US-AvaNeural, en-US-GuyNeural",
            type=str,
            default=os.environ.get("AZURE_VOICELIVE_VOICE", "en-US-Ava:DragonHDLatestNeural"),
        )

        parser.add_argument(
            "--instructions",
            help="System instructions for the AI assistant",
            type=str,
            default=os.environ.get(
                "AZURE_VOICELIVE_INSTRUCTIONS",
                "You are a helpful AI assistant. Respond naturally and conversationally. "
                "Keep your responses concise but engaging.",
            ),
        )

        # Token credentials stay the default. BooleanOptionalAction also adds
        # --no-use-token-credential, so API key authentication can be selected.
        parser.add_argument(
            "--use-token-credential",
            help="Use Azure token credential instead of API key",
            action=argparse.BooleanOptionalAction,
            default=True,
        )

        parser.add_argument("--verbose", help="Enable verbose logging", action="store_true")

        return parser.parse_args()


    async def main():
        """Main function."""
        args = parse_arguments()

        # Set logging level
        if args.verbose:
            logging.getLogger().setLevel(logging.DEBUG)

        # Validate credentials
        if not args.api_key and not args.use_token_credential:
            print("❌ Error: No authentication provided")
            print("Please provide an API key using --api-key or set AZURE_VOICELIVE_API_KEY environment variable,")
            print("or use --use-token-credential for Azure authentication.")
            sys.exit(1)

        try:
            # Create client with appropriate credential
            credential: Union[AzureKeyCredential, TokenCredential]
            if args.use_token_credential:
                credential = InteractiveBrowserCredential()  # or DefaultAzureCredential() if needed
                logger.info("Using Azure token credential")
            else:
                credential = AzureKeyCredential(args.api_key)
                logger.info("Using API key credential")

            # Create and start voice assistant
            assistant = BasicVoiceAssistant(
                endpoint=args.endpoint,
                credential=credential,
                model=args.model,
                voice=args.voice,
                instructions=args.instructions,
            )

            # Setup signal handlers for graceful shutdown
            def signal_handler(sig, frame):
                logger.info("Received shutdown signal")
                raise KeyboardInterrupt()

            signal.signal(signal.SIGINT, signal_handler)
            signal.signal(signal.SIGTERM, signal_handler)

            # Start the assistant
            await assistant.start()

        except KeyboardInterrupt:
            print("\n👋 Voice assistant shut down. Goodbye!")
        except Exception as e:
            logger.error(f"Fatal error: {e}")
            print(f"❌ Error: {e}")
            sys.exit(1)


    if __name__ == "__main__":
        # Check for required dependencies
        dependencies = {
            "pyaudio": "Audio processing",
            "azure.ai.voicelive": "Azure VoiceLive SDK",
            "azure.core": "Azure Core libraries",
        }

        missing_deps = []
        for dep, description in dependencies.items():
            try:
                __import__(dep.replace("-", "_"))
            except ImportError:
                missing_deps.append(f"{dep} ({description})")

        if missing_deps:
            print("❌ Missing required dependencies:")
            for dep in missing_deps:
                print(f"  - {dep}")
            print("\nInstall with: pip install azure-ai-voicelive pyaudio python-dotenv")
            sys.exit(1)

        # Check audio system
        try:
            p = pyaudio.PyAudio()
            # Check for input devices
            input_devices = [
                i
                for i in range(p.get_device_count())
                if cast(Union[int, float], p.get_device_info_by_index(i).get("maxInputChannels", 0) or 0) > 0
            ]
            # Check for output devices
            output_devices = [
                i
                for i in range(p.get_device_count())
                if cast(Union[int, float], p.get_device_info_by_index(i).get("maxOutputChannels", 0) or 0) > 0
            ]
            p.terminate()

            if not input_devices:
                print("❌ No audio input devices found. Please check your microphone.")
                sys.exit(1)
            if not output_devices:
                print("❌ No audio output devices found. Please check your speakers.")
                sys.exit(1)

        except Exception as e:
            print(f"❌ Audio system check failed: {e}")
            sys.exit(1)

        print("🎙️  Basic Voice Assistant with Azure VoiceLive SDK")
        print("=" * 50)

        # Run the assistant
        asyncio.run(main())

Sign in to Azure with the following command:

    az login

Run the Python file.

    python voice-live-quickstart.py

When the session is ready, start speaking. The Voice Live API returns audio with the model's response. You can interrupt the model by speaking. Press Ctrl+C to quit the conversation.
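The script's send thread hands captured audio to the event loop with asyncio.run_coroutine_threadsafe. The following standalone sketch shows that hand-off pattern with the standard library only. The names run_bridge, send, and worker are hypothetical, and send is a stand-in for a real network call. Unlike the script, this sketch waits for the scheduled sends so the result can be inspected.

```python
import asyncio
import queue
import threading


async def run_bridge(items):
    """Forward items from a worker thread to a coroutine on the event loop."""
    loop = asyncio.get_running_loop()
    pending: "queue.Queue" = queue.Queue()
    received = []
    futures = []

    async def send(item):
        # Stand-in for an async network send; runs on the event loop thread.
        received.append(item)

    def worker():
        # Runs in a background thread, similar to the script's send thread.
        while True:
            item = pending.get()
            if item is None:  # sentinel: no more items
                break
            # Schedule the coroutine on the loop from this non-loop thread.
            futures.append(asyncio.run_coroutine_threadsafe(send(item), loop))

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    for item in items:
        pending.put(item)
    pending.put(None)

    # Wait for the worker without blocking the event loop, then for the sends.
    await asyncio.to_thread(thread.join)
    await asyncio.gather(*(asyncio.wrap_future(f) for f in futures))
    return received


if __name__ == "__main__":
    print(asyncio.run(run_bridge(["chunk-1", "chunk-2", "chunk-3"])))
```

A coroutine can't be awaited from a plain thread, which is why the worker schedules it on the loop instead of calling it directly.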

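Output

The output of the script is printed to the console. You see messages that indicate the status of the system. The audio is played back through your speakers or headphones.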

============================================================

🎤 VOICE ASSISTANT READY

Start speaking to begin conversation

Press Ctrl+C to exit

============================================================

🎤 Listening...

🤔 Processing...

🎤 Ready for next input...

🎤 Listening...

🤔 Processing...

🎤 Ready for next input...

🎤 Listening...

🤔 Processing...

🎤 Ready for next input...

🎤 Listening...

🤔 Processing...

🎤 Listening...

🎤 Ready for next input...

🤔 Processing...

🎤 Ready for next input...
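The three status lines in this output correspond to three of the events handled by the script. The sketch below maps event names to console lines. It uses plain strings as stand-ins for the ServerEventType members named in the script, and console_lines is a hypothetical helper.

```python
# Plain-string stand-ins for the ServerEventType members used in the script.
CONSOLE_MESSAGES = {
    "INPUT_AUDIO_BUFFER_SPEECH_STARTED": "🎤 Listening...",
    "INPUT_AUDIO_BUFFER_SPEECH_STOPPED": "🤔 Processing...",
    "RESPONSE_AUDIO_DONE": "🎤 Ready for next input...",
}


def console_lines(event_names):
    """Return the status lines printed for a sequence of event names.

    Events without a status line (for example RESPONSE_CREATED) are only
    written to the log by the script, so they produce no line here.
    """
    return [CONSOLE_MESSAGES[name] for name in event_names if name in CONSOLE_MESSAGES]


if __name__ == "__main__":
    one_turn = [
        "INPUT_AUDIO_BUFFER_SPEECH_STARTED",
        "INPUT_AUDIO_BUFFER_SPEECH_STOPPED",
        "RESPONSE_CREATED",
        "RESPONSE_AUDIO_DONE",
        "RESPONSE_DONE",
    ]
    for line in console_lines(one_turn):
        print(line)
```

Because the lines follow event arrival order, a "Listening..." line can appear before "Ready for next input...", most likely when the user starts speaking again before the previous response has finished.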

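The script that you ran creates a log file named <timestamp>_voicelive.log in the logs folder.

The default log level is INFO. You can change it at startup with the --verbose command-line argument, or by updating the logging configuration in the code as follows:

    logging.basicConfig(
        filename=f'logs/{timestamp}_voicelive.log',
        filemode="w",
        level=logging.DEBUG,
        format='%(asctime)s:%(name)s:%(levelname)s:%(message)s'
    )

The log file contains information about the connection to the Voice Live API, including the request and response data. You can view the log file to see the details of the conversation.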

2025-10-02 14:47:37,901:__main__:INFO:Using Azure token credential

2025-10-02 14:47:37,901:__main__:INFO:Connecting to VoiceLive API with model gpt-realtime

2025-10-02 14:47:37,901:azure.core.pipeline.policies.http_logging_policy:INFO:Request URL: 'https://login.microsoftonline.com/organizations/v2.0/.well-known/openid-configuration'

Request method: 'GET'

Request headers:

'User-Agent': 'azsdk-python-identity/1.22.0 Python/3.11.9 (Windows-10-10.0.26200-SP0)'

No body was attached to the request

2025-10-02 14:47:38,057:azure.core.pipeline.policies.http_logging_policy:INFO:Response status: 200

Response headers:

'Date': 'Thu, 02 Oct 2025 21:47:37 GMT'

'Content-Type': 'application/json; charset=utf-8'

'Content-Length': '1641'

'Connection': 'keep-alive'

'Cache-Control': 'max-age=86400, private'

'Strict-Transport-Security': 'REDACTED'

'X-Content-Type-Options': 'REDACTED'

'Access-Control-Allow-Origin': 'REDACTED'

'Access-Control-Allow-Methods': 'REDACTED'

'P3P': 'REDACTED'

'x-ms-request-id': 'f81adfa1-8aa3-4ab6-a7b8-908f411e0d00'

'x-ms-ests-server': 'REDACTED'

'x-ms-srs': 'REDACTED'

'Content-Security-Policy-Report-Only': 'REDACTED'

'Cross-Origin-Opener-Policy-Report-Only': 'REDACTED'

'Reporting-Endpoints': 'REDACTED'

'X-XSS-Protection': 'REDACTED'

'Set-Cookie': 'REDACTED'

'X-Cache': 'REDACTED'

2025-10-02 14:47:42,105:azure.core.pipeline.policies.http_logging_policy:INFO:Request URL: 'https://login.microsoftonline.com/organizations/oauth2/v2.0/token'

Request method: 'POST'

Request headers:

'Accept': 'application/json'

'x-client-sku': 'REDACTED'

'x-client-ver': 'REDACTED'

'x-client-os': 'REDACTED'

'x-ms-lib-capability': 'REDACTED'

'client-request-id': 'REDACTED'

'x-client-current-telemetry': 'REDACTED'

'x-client-last-telemetry': 'REDACTED'

'X-AnchorMailbox': 'REDACTED'

'User-Agent': 'azsdk-python-identity/1.22.0 Python/3.11.9 (Windows-10-10.0.26200-SP0)'

A body is sent with the request

2025-10-02 14:47:42,466:azure.core.pipeline.policies.http_logging_policy:INFO:Response status: 200

Response headers:

'Date': 'Thu, 02 Oct 2025 21:47:42 GMT'

'Content-Type': 'application/json; charset=utf-8'

'Content-Length': '6587'

'Connection': 'keep-alive'

'Cache-Control': 'no-store, no-cache'

'Pragma': 'no-cache'

'Expires': '-1'

'Strict-Transport-Security': 'REDACTED'

'X-Content-Type-Options': 'REDACTED'

'P3P': 'REDACTED'

'client-request-id': 'REDACTED'

'x-ms-request-id': '2e82e728-22c0-4568-b3ed-f00ec79a2500'

'x-ms-ests-server': 'REDACTED'

'x-ms-clitelem': 'REDACTED'

'x-ms-srs': 'REDACTED'

'Content-Security-Policy-Report-Only': 'REDACTED'

'Cross-Origin-Opener-Policy-Report-Only': 'REDACTED'

'Reporting-Endpoints': 'REDACTED'

'X-XSS-Protection': 'REDACTED'

'Set-Cookie': 'REDACTED'

'X-Cache': 'REDACTED'

2025-10-02 14:47:42,467:azure.identity._internal.interactive:INFO:InteractiveBrowserCredential.get_token succeeded

2025-10-02 14:47:42,884:__main__:INFO:AudioProcessor initialized with 24kHz PCM16 mono audio

2025-10-02 14:47:42,884:__main__:INFO:Setting up voice conversation session...

2025-10-02 14:47:42,887:__main__:INFO:Session configuration sent

2025-10-02 14:47:42,943:__main__:INFO:Audio playback system ready

2025-10-02 14:47:42,943:__main__:INFO:Voice assistant ready! Start speaking...

2025-10-02 14:47:42,975:__main__:INFO:Session ready: sess_CMLRGjWnakODcHn583fXf

2025-10-02 14:47:42,994:__main__:INFO:Started audio capture

2025-10-02 14:47:47,513:__main__:INFO:\U0001f3a4 User started speaking - stopping playback

2025-10-02 14:47:47,593:__main__:INFO:Stopped audio playback

2025-10-02 14:47:51,757:__main__:INFO:\U0001f3a4 User stopped speaking

2025-10-02 14:47:51,813:__main__:INFO:Audio playback system ready

2025-10-02 14:47:51,816:__main__:INFO:\U0001f916 Assistant response created

2025-10-02 14:47:58,009:__main__:INFO:\U0001f916 Assistant finished speaking

2025-10-02 14:47:58,009:__main__:INFO:\u2705 Response complete

2025-10-02 14:48:07,309:__main__:INFO:Received shutdown signal
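Because each entry follows the asctime:name:levelname:message format, the log can be parsed to measure timings such as the gap between the end of user speech and the start of a response. The following is a minimal sketch, assuming the log format shown above. The helper names parse_line and seconds_between are hypothetical.

```python
import re
from datetime import datetime

# Matches the format '%(asctime)s:%(name)s:%(levelname)s:%(message)s'.
# asctime itself contains colons, so it's matched explicitly.
LOG_LINE = re.compile(
    r"^(?P<time>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}):"
    r"(?P<name>[^:]+):(?P<level>[A-Z]+):(?P<message>.*)$"
)


def parse_line(line):
    """Return (timestamp, logger name, level, message), or None if no match."""
    match = LOG_LINE.match(line.strip())
    if match is None:
        return None  # continuation lines such as request headers
    stamp = datetime.strptime(match["time"], "%Y-%m-%d %H:%M:%S,%f")
    return stamp, match["name"], match["level"], match["message"]


def seconds_between(lines, start_text, end_text):
    """Seconds from the first message containing start_text to the next containing end_text."""
    start = None
    for line in lines:
        parsed = parse_line(line)
        if parsed is None:
            continue
        stamp, _, _, message = parsed
        if start is None and start_text in message:
            start = stamp
        elif start is not None and end_text in message:
            return (stamp - start).total_seconds()
    return None


if __name__ == "__main__":
    sample = [
        r"2025-10-02 14:47:51,757:__main__:INFO:\U0001f3a4 User stopped speaking",
        r"2025-10-02 14:47:51,813:__main__:INFO:Audio playback system ready",
        r"2025-10-02 14:47:51,816:__main__:INFO:\U0001f916 Assistant response created",
    ]
    print(seconds_between(sample, "User stopped speaking", "Assistant response created"))
```

Lines that don't start with a timestamp, such as the request and response headers, are skipped instead of raising an error.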

In this article, you learn how to use Azure AI Speech Voice Live with Azure AI Foundry models by using the VoiceLive SDK for C#.

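Prerequisites

- An Azure subscription. Create one for free.

- An Azure AI Foundry resource created in one of the supported regions. For more information about region availability, see the Voice Live overview documentation.

- .NET SDK version 6.0 or later installed.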

Start a voice conversation

Follow these steps to create a console application and install the Voice Live SDK.

Open a command prompt window in the folder where you want the new project. Run the following command to create a console application with the .NET CLI.

    dotnet new console

This command creates the Program.cs file in your project directory.

Install the Voice Live SDK in your new project with the .NET CLI.

    dotnet add package Azure.AI.VoiceLive --prerelease

Create a new file named appsettings.json in the folder where you want to run the code. In that file, add the following JSON content:

    {
      "VoiceLive": {
        "ApiKey": "YOUR_VOICELIVE_API_KEY",
        "Endpoint": "wss://api.voicelive.com/v1",
        "Model": "gpt-realtime",
        "Voice": "en-US-Ava:DragonHDLatestNeural",
        "Instructions": "You are a professional customer service representative for TechCorp. You have access to customer databases and order systems. Always be polite, helpful, and efficient. When customers ask about orders, accounts, or need to schedule service, use the available tools to provide accurate, real-time information. Keep your responses concise but thorough."
      },
      "Logging": {
        "LogLevel": {
          "Default": "Information",
          "Azure.AI.VoiceLive": "Debug"
        }
      }
    }

Replace the ApiKey value with your AI Foundry API key, and replace the Endpoint value with your resource endpoint. You can also change the model, voice, and instructions values as needed.

将