Defender for Storage supports different ways to handle malicious files. Select the remediation option that fits your scenario:

- Built-in remediation capabilities

- Automated workflows to move or remove malicious files

- Automated workflows to move or ingest clean files to another destination

With malware scanning, build your automated remediation using these scan result options:

- Defender for Cloud security alerts

- Event Grid events

- Blob index tags

Tip

Try the hands-on lab to explore malware scanning in Defender for Storage. The Ninja training module gives step-by-step instructions to:

- Set up malware scanning

- Test the feature end to end

- Set up remediation responses

This lab is part of the Microsoft Defender for Cloud training series and gives practical experience with security features.

Remediation options for malware detection

Built-in automated malware remediation for malicious blobs

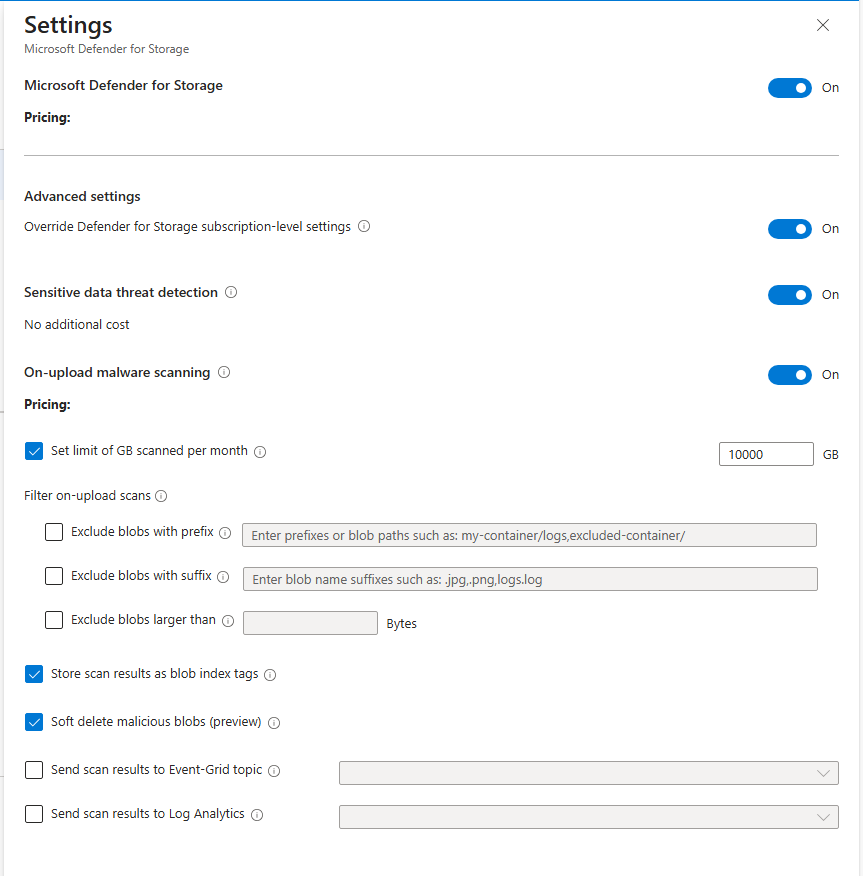

Defender for Storage Malware Protection has a built-in capability that simplifies and automates the remediation process for malicious blobs, so you reduce the risk of malware distribution. When Defender for Storage detects a malicious blob during on-upload or on-demand malware scanning, it automatically starts a soft delete to safely quarantine the blob and keep it recoverable for further analysis.

If soft delete for blobs isn't already enabled on the storage account, Defender for Storage tries to enable it when you turn on the Soft delete malicious blobs feature. This reduces setup steps and lets remediation start as soon as the feature is on.

Use cases

- Automatically remediate malware distribution by:

  - Soft deleting the blob to prevent access

  - Retaining the blob for investigation and recovery

  - Removing the need for external automation or manual steps

- Let security admins or SOC analysts keep storage clean with minimal manual effort

- Let organizations quickly fix threats without complex workflows

Feature notes

Soft delete malicious blobs works only for malicious blobs. It's off by default.

You can turn on soft delete malicious blobs at the subscription or storage account level.

When you soft delete a blob, it stays in the same blob container. You can restore the blob within the retention period. For more information, see Soft delete for blobs.

If you turn on Versioning for Blobs on your storage account, see Manage and restore soft delete for blobs to learn how to restore a soft deleted blob.

The retention period defaults to seven days when you turn on the soft delete malicious blobs feature. You can change it to any value from 1 to 365 days in your storage account settings.

If you turn off the soft delete property setting on the storage account after turning it on, malicious blobs aren't deleted. In this case, Defender for Storage doesn't turn soft delete back on, and it creates an alert.

Deletion can fail because of misconfiguration or missing permissions. The alert shows the reason for the failure.

Storing the soft deleted blob during the retention period costs the same as active data. For more information, see Soft delete for blobs.

If you restore a blob, Azure counts it as a write transaction. For more information, see Map each REST operation to a price.

Setting up custom remediation for malicious blobs

Use these options to automate your remediation:

Block access to unscanned or malicious files by using ABAC (attribute-based access control)

With Microsoft Entra Attribute-based access control (ABAC) authorization, you can block access to malicious and unscanned files. You can set conditional access to blobs based on the scanning results, and allow applications and users to access only scanned files that are clean.

To set it up, follow the instructions in the following video.

Delete or move a malicious blob

Use code or workflow automation to delete or move malicious files to quarantine.

Prepare your environment for delete or move

Delete the malicious file - Before setting up automated deletion, enable soft delete on the storage account. Soft delete lets you "undelete" files after a false positive or when security professionals want to investigate the malicious files.

Move the malicious file to quarantine - Move files to a dedicated storage container or storage account that serves as the "quarantine". You might want only certain users, such as a security admin or a SOC analyst, to have permission to access this dedicated container or storage account.

- Using Microsoft Entra ID to control access to blob storage is a best practice. To control access to the dedicated quarantine storage container, you can use container-level role assignments using Microsoft Entra role-based access control (RBAC). Users with storage account-level permissions might still be able to access the "quarantine" container. You can either edit their permissions to be container-level or choose a different approach and move the malicious file to a dedicated storage account.

- If you must use other methods, such as SAS (shared access signatures) tokens on the protected storage account, move malicious files to another storage account (quarantine). Then, grant Microsoft Entra permission to access the quarantined storage account.

Set up automation

Option 1: Logic App based on Microsoft Defender for Cloud security alerts

Logic App based responses are a simple, no-code approach to setting up a response. However, the response time is slower than with the event-driven, code-based approach.

Deploy the DeleteBlobLogicApp Azure Resource Manager (ARM) template using the Azure portal.

Select the Logic App you deployed.

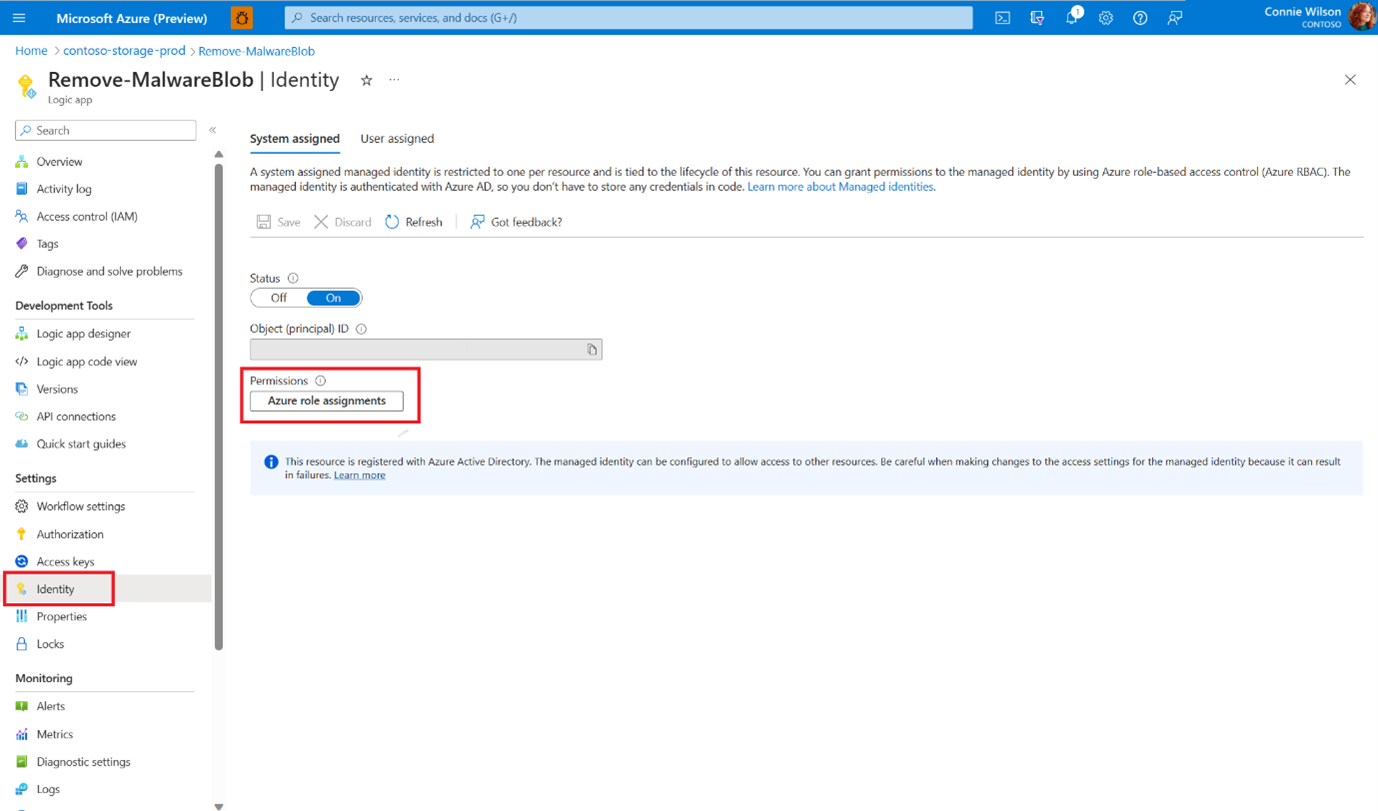

To allow the Logic App to delete blobs from your storage account, add a role assignment:

Go to Identity in the side menu and select Azure role assignments.

Add a role assignment at the subscription level with the Storage Blob Data Contributor role.

Create workflow automation for Microsoft Defender for Cloud alerts:

- Go to Microsoft Defender for Cloud in the Azure portal.

- Go to Workflow automation in the side menu.

- Add a new workflow: In the Alert name contains field, fill in Malicious file uploaded to storage account and choose your Logic app in the Actions section.

- Select Create.

Option 2: Function App based on Event Grid events

A Function App provides high performance with a low latency response time.

Create a Function App in the same resource group as your protected storage account.

Add a role assignment for the Function app identity.

Go to Identity in the side menu, make sure the System assigned identity status is On, and select Azure role assignments.

Add a role assignment at the subscription or storage account level with the Storage Blob Data Contributor role.

Consume Event Grid events and connect an Azure Function as the endpoint type.

When writing the Azure Function code, you can use our premade function sample - MoveMaliciousBlobEventTrigger, or write your own code to copy the blob elsewhere, then delete it from the source.
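If you write your own code, the copy-then-delete step might look like the following minimal sketch. It isn't the MoveMaliciousBlobEventTrigger sample. It assumes two client objects that behave like the `BlobClient` class in the azure-storage-blob package (only `url`, `start_copy_from_url`, `get_blob_properties`, and `delete_blob` are used). The `move_blob` name, the timeout values, and the `BlobLike` protocol are hypothetical. Depending on how your accounts are configured, the source URL might also need a SAS token or other authorization so the destination service can read it.

```python
import time
from typing import Any, Protocol


class BlobLike(Protocol):
    """The subset of a BlobClient-style object that this sketch relies on (assumption)."""

    url: str

    def start_copy_from_url(self, source_url: str) -> Any: ...
    def get_blob_properties(self) -> Any: ...
    def delete_blob(self) -> Any: ...


def move_blob(source: BlobLike, destination: BlobLike,
              timeout_s: float = 60.0, poll_s: float = 1.0) -> bool:
    """Copy source to destination, then delete source only after the copy succeeds."""
    destination.start_copy_from_url(source.url)
    deadline = time.monotonic() + timeout_s
    while True:
        # The copy runs server-side, so poll the destination for its status.
        status = destination.get_blob_properties().copy.status
        if status == "success":
            source.delete_blob()
            return True
        if status in ("failed", "aborted") or time.monotonic() >= deadline:
            # Leave the source in place so the malicious file isn't lost
            # before it reaches quarantine.
            return False
        time.sleep(poll_s)
```

Deleting only after a confirmed copy means a failed move leaves the blob where it was, which is safer for later investigation than deleting first.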

For each scan result, an event is sent according to the following schema.

Event message structure

The event message is a JSON object that contains key-value pairs that provide detailed information about a malware scanning result. Here's a breakdown of each key in the event message:

- id: A unique identifier for the event.

- subject: A string that describes the resource path of the scanned blob (file) in the storage account.

- data: A JSON object that contains additional information about the event:

  - correlationId: A unique identifier that you can use to correlate multiple events related to the same scan.

  - blobUri: The URI of the scanned blob (file) in the storage account.

  - eTag: The ETag of the scanned blob (file).

  - scanFinishedTimeUtc: The UTC timestamp when the scan completed.

  - scanResultType: The result of the scan, such as Malicious or No threats found.

  - scanResultDetails: A JSON object containing details about the scan result:

    - malwareNamesFound: An array of malware names found in the scanned file.

    - sha256: The SHA-256 hash of the scanned file.

- eventType: A string that indicates the type of event, in this case, Microsoft.Security.MalwareScanningResult.

- dataVersion: The version number of the data schema.

- metadataVersion: The version number of the metadata schema.

- eventTime: The UTC timestamp when the event was generated.

- topic: The resource path of the Event Grid topic that the event belongs to.

Here's an example of an event message:

    {
      "id": "aaaa0000-bb11-2222-33cc-444444dddddd",
      "subject": "storageAccounts/<storage_account_name>/containers/app-logs-storage/blobs/EICAR - simulating malware.txt",
      "data": {
        "correlationId": "aaaa0000-bb11-2222-33cc-444444dddddd",
        "blobUri": "https://<storage_account_name>.blob.core.windows.net/app-logs-storage/EICAR - simulating malware.txt",
        "eTag": "0x000000000000000",
        "scanFinishedTimeUtc": "2023-05-04T11:31:54.0481279Z",
        "scanResultType": "Malicious",
        "scanResultDetails": {
          "malwareNamesFound": [
            "DOS/EICAR_Test_File"
          ],
          "sha256": "AA11BB22CC33DD44EE55FF66AA77BB88CC99DD00"
        }
      },
      "eventType": "Microsoft.Security.MalwareScanningResult",
      "dataVersion": "1.0",
      "metadataVersion": "1",
      "eventTime": "2023-05-04T11:31:54.048375Z",
      "topic": "/subscriptions/<subscription_id>/resourceGroups/<resource_group_name>/providers/Microsoft.EventGrid/topics/<event_grid_topic_name>"
    }

By understanding the structure of the event message, you can extract relevant information about the malware scanning result and process it accordingly.
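As an illustration, the following sketch pulls the fields a remediation handler typically needs out of one event message. It uses only the keys described above. The `ScanResult` class and `parse_scan_event` function are hypothetical names, and the sketch assumes that `blobUri` has the form `https://<account>/<container>/<blob path>` and that `scanResultDetails` can be missing or empty for clean files.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from urllib.parse import unquote, urlsplit

SCAN_EVENT_TYPE = "Microsoft.Security.MalwareScanningResult"


@dataclass(frozen=True)
class ScanResult:
    container: str
    blob_name: str
    blob_uri: str
    verdict: str
    malware_names: tuple[str, ...]

    @property
    def is_malicious(self) -> bool:
        return self.verdict == "Malicious"


def parse_scan_event(raw: str) -> ScanResult | None:
    """Return the scan result carried by one event, or None for other event types."""
    event = json.loads(raw)
    if event.get("eventType") != SCAN_EVENT_TYPE:
        return None
    data = event["data"]
    # Split the URI path into the container and the blob path inside it.
    path = unquote(urlsplit(data["blobUri"]).path).lstrip("/")
    container, _, blob_name = path.partition("/")
    details = data.get("scanResultDetails") or {}
    return ScanResult(
        container=container,
        blob_name=blob_name,
        blob_uri=data["blobUri"],
        verdict=data["scanResultType"],
        malware_names=tuple(details.get("malwareNamesFound") or ()),
    )
```

A handler could call `parse_scan_event` on each incoming message and branch on `is_malicious` to decide whether to quarantine the blob or release it for processing.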

Make your applications and data flows aware of malware scanning results

Malware scanning runs in near real time, and there's usually a short time window between uploading a file and scanning it. Because storage isn't compute, malicious files can't run in your storage. The risk is that users or applications use malicious files and spread them throughout the organization.

You can make your applications and data flows aware of malware scanning results. These methods make sure files aren't used or processed until:

- Scanning finishes

- Scan results are available

- You take appropriate actions based on the results

Applications ingest data based on the scan result

Option 1: Apps checking "Index tag" before processing

Update all applications that use the storage account. Each application checks the scan result for each file and reads the blob only if the blob index tag scan result is No threats found.
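The check itself can be small. The sketch below is one possible shape for it, operating on a dictionary of index tags such as the one returned by `BlobClient.get_blob_tags()` in the azure-storage-blob package. The tag key `Malware Scanning scan result` is an assumption that isn't stated on this page, so confirm it against the index tags on a scanned blob in your own account. `is_safe_to_read` is a hypothetical helper name.

```python
from typing import Mapping

# Assumed tag key and clean value; verify both against a scanned blob.
SCAN_RESULT_TAG = "Malware Scanning scan result"
CLEAN_VALUE = "No threats found"


def is_safe_to_read(tags: Mapping[str, str]) -> bool:
    """True only when a scan result tag is present and reports no threats.

    A missing tag means the blob hasn't been scanned yet, so it's treated
    as unsafe rather than clean.
    """
    return tags.get(SCAN_RESULT_TAG, "").strip().lower() == CLEAN_VALUE.lower()
```

Treating an absent tag as unsafe covers the short window between upload and scan completion, at the cost of the application having to retry later.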

Option 2: Connect your application to a Webhook in Event Grid events

Connect your application to a Webhook in Event Grid events and use those events to trigger relevant processes for files with no threats found scan results. Learn more about using Webhook event delivery and validating your endpoint.
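A webhook endpoint has two jobs: answer the Event Grid subscription validation handshake, and act on scan result events. The following framework-agnostic sketch shows both under the assumption that events arrive in the Event Grid event schema as a JSON array. `handle_webhook_post` and the `on_clean_blob` callback are hypothetical names. Wire the function into whatever HTTP framework your application already uses.

```python
import json
from typing import Callable

VALIDATION_EVENT = "Microsoft.EventGrid.SubscriptionValidationEvent"
SCAN_EVENT = "Microsoft.Security.MalwareScanningResult"


def handle_webhook_post(body: str, on_clean_blob: Callable[[str], None]) -> tuple[int, dict]:
    """Process one POST body and return (HTTP status, JSON response body)."""
    events = json.loads(body)
    if isinstance(events, dict):  # tolerate a single event object
        events = [events]
    for event in events:
        event_type = event.get("eventType")
        data = event.get("data") or {}
        if event_type == VALIDATION_EVENT:
            # Echo the code back so Event Grid accepts the endpoint.
            return 200, {"validationResponse": data["validationCode"]}
        if event_type == SCAN_EVENT and data.get("scanResultType") == "No threats found":
            on_clean_blob(data["blobUri"])
    return 200, {}
```

Only events with a clean result reach the callback. Malicious results are ignored here on the assumption that a separate remediation path handles them.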

Use an intermediary storage account as a DMZ

Set up an intermediary storage account for untrusted content (DMZ) and direct upload traffic to the DMZ. On the untrusted storage account, enable malware scanning and connect Event Grid and a Function App to move only blobs with the No threats found scan result to the destination storage account.
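The routing decision in the Function App can be sketched as follows: a clean blob maps to a URL in the destination account, and anything else stays in the DMZ. This assumes the destination account uses the same endpoint suffix and the same container and blob path as the DMZ account, which might not match your layout. `destination_url` and the account names in the usage note are hypothetical.

```python
from __future__ import annotations

from urllib.parse import urlsplit, urlunsplit


def destination_url(blob_uri: str, scan_result: str, destination_account: str) -> str | None:
    """Return where a DMZ blob should be copied, or None if it must stay in the DMZ."""
    if scan_result != "No threats found":
        return None
    parts = urlsplit(blob_uri)
    # Swap only the account label; keep the endpoint suffix, container, and blob path.
    _, _, suffix = parts.netloc.partition(".")
    return urlunsplit(parts._replace(netloc=f"{destination_account}.{suffix}"))
```

For example, calling the function with `https://dmzacct.blob.core.windows.net/uploads/a/b.txt`, a result of `No threats found`, and the account name `trusted` yields the same path under `trusted.blob.core.windows.net`.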

Next step

Learn how to understand results from malware scanning in Microsoft Defender for Storage.