Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

This guide provides step-by-step instructions for using custom named entity recognition (NER) with Azure AI Foundry or the REST API. NER lets you detect and categorize entities in unstructured text—like people, places, organizations, and numbers. With custom NER, you can train models to identify entities specific to your business and adapt them as needs evolve.

To get start, a sample loan agreement is provided as a dataset to build a custom NER model and extract these key entities:

- Date of the agreement

- Borrower's name, address, city, and state

- Lender's name, address, city, and state

- Loan and interest amounts

Note

- This project requires that you have an Azure AI Foundry hub-based project with an Azure storage account (not a Foundry project). For more information, see How to create and manage an Azure AI Foundry hub

- If you already have an Azure AI Language or multi-service resource—whether used on its own or through Language Studio—you can continue to use those existing Language resources within the Azure AI Foundry portal. For more information, see How to use Azure AI services in the Azure AI Foundry portal.

Prerequisites

An Azure subscription. If you don't have one, you can create one for free.

The Requisite permissions. Make sure the person establishing the account and project is assigned as the Azure AI Account Owner role at the subscription level. Alternatively, having either the Contributor or Cognitive Services Contributor role at the subscription scope also meets this requirement. For more information, see Role based access control (RBAC).

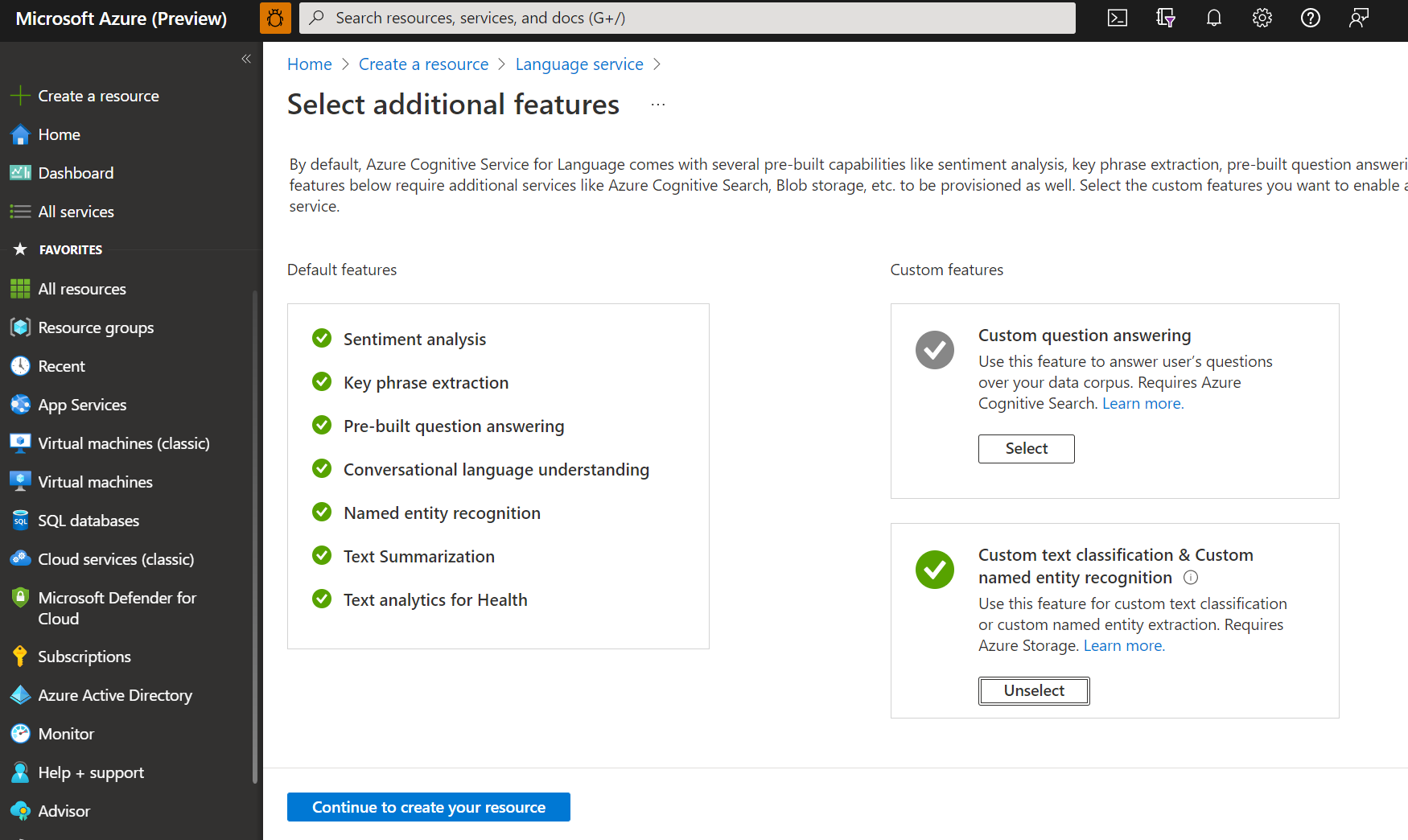

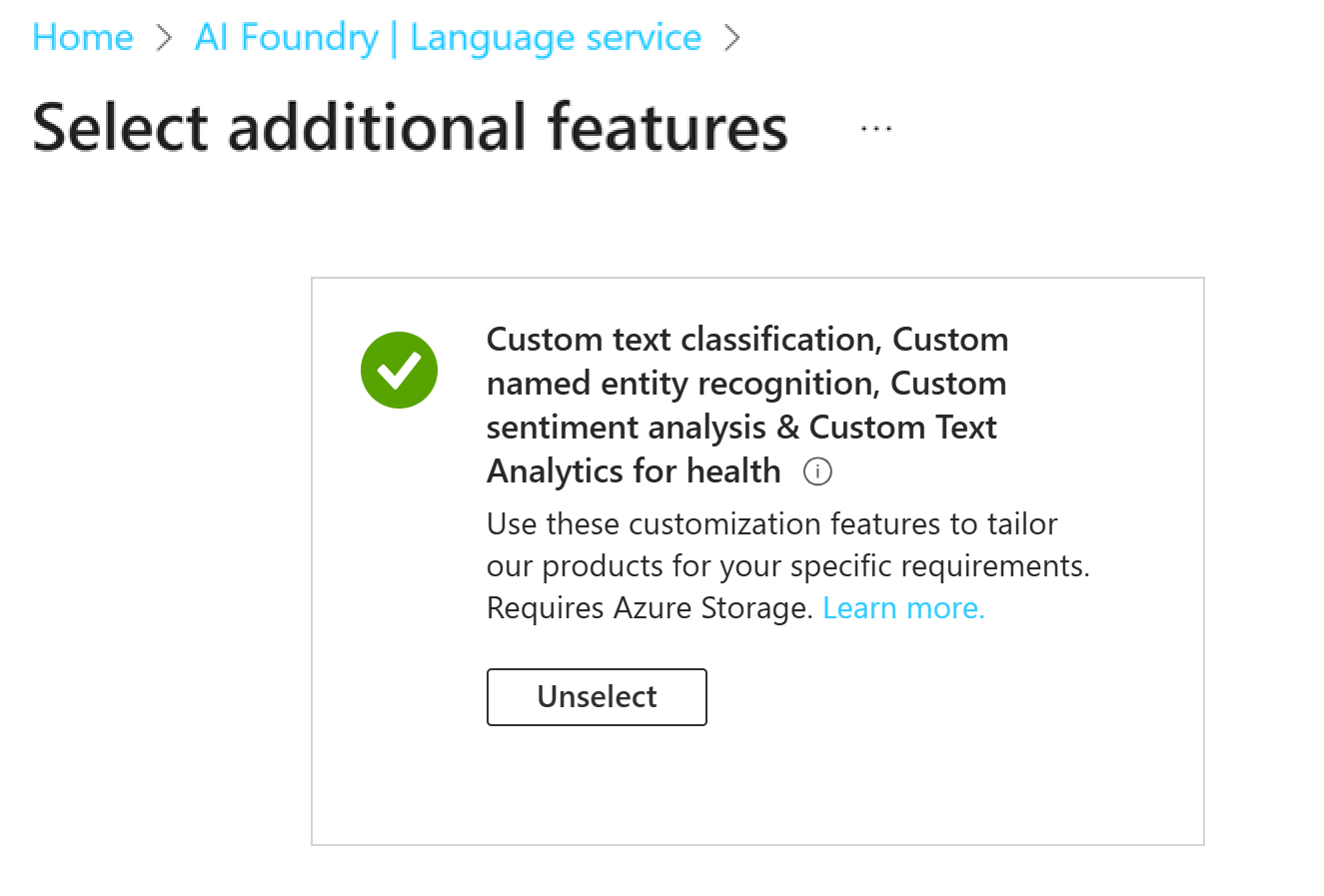

An Azure AI Language resource with a storage account. On the select additional features page, select the Custom text classification, Custom named entity recognition, Custom sentiment analysis & Custom Text Analytics for health box to link a required storage account with this resource:

Note

- You need to have an owner role assigned on the resource group to create a Language resource.

- If you're connecting a preexisting storage account, you should have an owner role assigned to it.

- Don't move the storage account to a different resource group or subscription once linked with the Language resource.

An Azure AI Foundry hub-based project. For more information about Foundry hub-based project, see Create a hub project for Azure AI Foundry.

A custom NER dataset uploaded to your storage container. A custom named entity recognition (NER) dataset is the collection of labeled text documents used to train your custom NER model. You can download our sample dataset for this quickstart. The source language is English.

Step 1: Configure required roles, permissions, and settings

Let's begin by configuring your resources.

Enable custom named entity recognition feature

Make sure the Custom text classification / Custom Named Entity Recognition feature is enabled in the Azure portal.

- Go to your Language resource in the Azure portal.

- From the left side menu, under Resource Management section, select Features.

- Make sure the Custom text classification / Custom Named Entity Recognition feature is enabled.

- If your storage account isn't assigned, select and connect your storage account.

- Select Apply.

Add required roles for your Azure AI Language resource

Go to your storage account or Language resource in the Azure portal.

Select Access Control (IAM) in the left pane.

Select Add to Add Role Assignments, and choose the appropriate role for your account.

- You should have the Cognitive Services Language Owner or Cognitive Services Contributor role assignment for your Language resource.

Within Assign access to, select User, group, or service principal.

Select Select members.

Select your user name. You can search for user names in the Select field. Repeat this step for all roles.

Repeat these steps for all the user accounts that need access to this resource.

Add required roles for your storage account

- Go to your storage account page in the Azure portal.

- Select Access Control (IAM) in the left pane.

- Select Add to Add Role Assignments, and choose the Storage blob data contributor role on the storage account.

- Within Assign access to, select Managed identity.

- Select Select members.

- Select your subscription, and Language as the managed identity. You can search for your language resource in the Select field.

Add required user roles

Important

If you skip this step, you get a 403 error when you try to connect to your custom project. It's important that your current user has this role to access storage account blob data, even if you're the owner of the storage account.

- Go to your storage account page in the Azure portal.

- Select Access Control (IAM) in the left pane.

- Select Add to Add Role Assignments, and choose the Storage blob data contributor role on the storage account.

- Within Assign access to, select User, group, or service principal.

- Select Select members.

- Select your User. You can search for user names in the Select field.

Important

If you have a Firewall or virtual network or private endpoint, be sure to select Allow Azure services on the trusted services list to access this storage account under the Networking tab in the Azure portal.

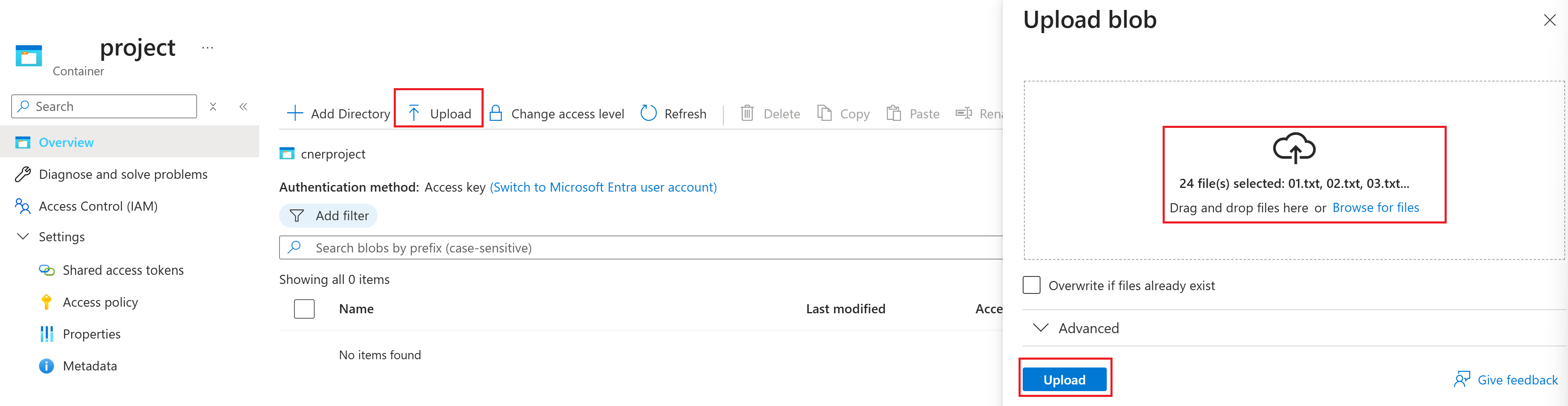

Step 2: Upload your dataset to your storage container

Next, let's add a container and upload your dataset files directly to the root directory of your storage container. These documents are used to train your model.

Add a container to the storage account associated with your language resource. For more information, see create a container.

Download the sample dataset from GitHub. The provided sample dataset contains 20 loan agreements:

- Each agreement includes two parties: a lender and a borrower.

- You extract relevant information for: both parties, agreement date, loan amount, and interest rate.

Open the .zip file, and extract the folder containing the documents.

Navigate to the Azure AI Foundry.

If you aren't already signed in, the portal prompts you to do so with your Azure credentials.

Once signed in, access your existing Azure AI Foundry hub-based project for this quickstart.

Select Management center from the left navigation menu.

Select Connected resources from the Hub section of the Management center menu.

Next choose the workspace blob storage that was set up for you as a connected resource.

On the workspace blob storage, select View in Azure Portal.

On the AzurePortal page for your blob storage, select Upload from the top menu. Next, choose the

.txtand.jsonfiles you downloaded earlier. Finally, select the Upload button to add the file to your container.

Now that the required Azure resources are provisioned and configured within the Azure portal, let's use these resources in the Azure AI Foundry to create a fine-tuned custom Named Entity Recognition (NER) model.

Step 3: Connect your Azure AI Language resource

Next we create a connection to your Azure AI Language resource so Azure AI Foundry can access it securely. This connection provides secure identity management and authentication, as well as controlled and isolated access to data.

Return to the Azure AI Foundry.

Access your existing Azure AI Foundry hub-based project for this quickstart.

Select Management center from the left navigation menu.

Select Connected resources from the Hub section of the Management center menu.

In the main window, select the + New connection button.

Select Azure AI Language from the Add a connection to external assets window.

Select Add connection, then select Close.

Step 4: Fine tune your custom NER model

Now, we're ready to create a custom NER fine-tune model.

From the Project section of the Management center menu, select Go to project.

From the Overview menu, select Fine-tuning.

From the main window, select the AI Service fine-tuning tab and then the + Fine-tune button.

From the Create service fine-tuning window, choose the Custom named entity recognition tab, and then select Next.

In the Create service fine-tuning task window, complete the fields as follows:

Connected service. The name of your language service resource should already appear in this field by default. if not, add it from the drop-down menu.

Name. Give your fine-tuning task project a name.

Language. English is set as the default and already appears in the field.

Description. You can optionally provide a description or leave this field empty.

Blob store container. Select the workspace blob storage container from Step 2 and choose the Connect button.

Finally, select the Create button. It can take a few minutes for the creating operation to complete.

Step 5: Train your model

- From the Getting Started menu, choose Manage data. In the Add data for training and testing window, you see the sample data that you previously uploaded to your Azure Blob Storage container.

- Next, from the Getting Started menu, select Train model.

- Select the + Train model button. When the Train a new model window appears, enter a name for your new model and keep the default values. Select the Next button.

- In the Train a new model window, keep the default Automatically split the testing set from training data enabled with the recommended percentage set at 80% for training data and 20% for testing data.

- Review your model configuration then select the Create button.

- After training a model, you can select Evaluate model from the Getting started menu. You can select your model from the Evaluate you model window and make improvements if necessary.

Step 6: Deploy your model

Typically, after training a model, you review its evaluation details. For this quickstart, you can just deploy your model and make it available to test in the Language playground, or by calling the prediction API. However, if you wish, you can take a moment to select Evaluate your model from the left-side menu and explore the in-depth telemetry for your model. Complete the following steps to deploy your model within Azure AI Foundry.

Select Deploy model from the left-side menu.

Next, select ➕Deploy a trained model from the Deploy your model window.

Make sure the Create a new deployment button is selected.

Complete the Deploy a trained model window fields:

- Deployment name. Name your model.

- Assign a model. Select your trained model from the drop-down menu.

- Region. Select a region from the drop-down menu.

Finally, select the Create button. It may take a few minutes for your model to deploy.

After successful deployment, you can view your model's deployment status on the Deploy your model page. The expiration date that appears marks the date when your deployed model becomes unavailable for prediction tasks. This date is usually 18 months after a training configuration is deployed.

Step 7: Try the Language playground

The Language playground provides a sandbox to test and configure your fine-tuned model before deploying it to production, all without writing code.

- From the top menu bar, select Try in playground.

- In the Language Playground window, select the Custom named entity recognition tile.

- In the Configuration section, select your Project name and Deployment name from the drop-down menus.

- Enter an entity and select Run.

- You can evaluate the results in the Details window.

That's it, congratulations!

In this quickstart, you created a fine-tuned custom NER model, deployed it in Azure AI Foundry, and tested your model in the Language playground.

Clean up resources

If you no longer need your project, you can delete it from the Azure AI Foundry.

- Navigate to the Azure AI Foundry home page. Initiate the authentication process by signing in, unless you already completed this step and your session is active.

- Select the project that you want to delete from the Keep building with Azure AI Foundry.

- Select Management center.

- Select Delete project.

To delete the hub along with all its projects:

Navigate to the Overview tab in the Hub section.

On the right, select Delete hub.

The link opens the Azure portal for you to delete the hub there.

Prerequisites

- Azure subscription - Create one for free

Create a new Azure AI Language resource and Azure storage account

Before you can use custom named entity recognition (NER), you need to create an Azure AI Language resource, which gives you the credentials that you need to create a project and start training a model. You also need an Azure storage account, where you can upload your dataset that is used in building your model.

Important

To get started quickly, we recommend creating a new Azure AI Language resource. Use the steps provided in this article, to create the Language resource, and create and/or connect a storage account at the same time. Creating both at the same time is easier than doing it later.

If you have a preexisting resource that you'd like to use, you need to connect it to storage account. See create project for information.

Create a new resource from the Azure portal

Sign in to the Azure portal to create a new Azure AI Language resource.

In the window that appears, select Custom text classification & custom named entity recognition from the custom features. Select Continue to create your resource at the bottom of the screen.

Create a Language resource with following details.

Name Description Subscription Your Azure subscription. Resource group A resource group that will contain your resource. You can use an existing one, or create a new one. Region The region for your Language resource. For example, "West US 2". Name A name for your resource. Pricing tier The pricing tier for your Language resource. You can use the Free (F0) tier to try the service. Note

If you get a message saying "your login account is not an owner of the selected storage account's resource group", your account needs to have an owner role assigned on the resource group before you can create a Language resource. Contact your Azure subscription owner for assistance.

In the Custom text classification & custom named entity recognition section, select an existing storage account or select New storage account. These values are to help you get started, and not necessarily the storage account values you’ll want to use in production environments. To avoid latency during building your project connect to storage accounts in the same region as your Language resource.

Storage account value Recommended value Storage account name Any name Storage account type Standard LRS Make sure the Responsible AI Notice is checked. Select Review + create at the bottom of the page, then select Create.

Upload sample data to blob container

After you have created an Azure storage account and connected it to your Language resource, you will need to upload the documents from the sample dataset to the root directory of your container. These documents will later be used to train your model.

Download the sample dataset from GitHub.

Open the .zip file, and extract the folder containing the documents.

In the Azure portal, navigate to the storage account you created, and select it.

In your storage account, select Containers from the left menu, located below Data storage. On the screen that appears, select + Container. Give the container the name example-data and leave the default Public access level.

After your container has been created, select it. Then select Upload button to select the

.txtand.jsonfiles you downloaded earlier.

The provided sample dataset contains 20 loan agreements. Each agreement includes two parties: a lender and a borrower. You can use the provided sample file to extract relevant information for: both parties, an agreement date, a loan amount, and an interest rate.

Get your resource keys and endpoint

Go to your resource overview page in the Azure portal

From the menu on the left side, select Keys and Endpoint. You will use the endpoint and key for the API requests

Create a custom NER project

Once your resource and storage account are configured, create a new custom NER project. A project is a work area for building your custom ML models based on your data. Your project is accessed you and others who have access to the Language resource being used.

Use the tags file you downloaded from the sample data in the previous step and add it to the body of the following request.

Trigger import project job

Submit a POST request using the following URL, headers, and JSON body to import your labels file. Make sure that your labels file follow the accepted format.

If a project with the same name already exists, the data of that project is replaced.

{Endpoint}/language/authoring/analyze-text/projects/{projectName}/:import?api-version={API-VERSION}

| Placeholder | Value | Example |

|---|---|---|

{ENDPOINT} |

The endpoint for authenticating your API request. | https://<your-custom-subdomain>.cognitiveservices.azure.com |

{PROJECT-NAME} |

The name for your project. This value is case-sensitive. | myProject |

{API-VERSION} |

The version of the API you're calling. The value referenced here's for the latest version released. See Model lifecycle to learn more about other available API versions. | 2022-05-01 |

Headers

Use the following header to authenticate your request.

| Key | Value |

|---|---|

Ocp-Apim-Subscription-Key |

The key to your resource. Used for authenticating your API requests. |

Body

Use the following JSON in your request. Replace the placeholder values with your own values.

{

"projectFileVersion": "{API-VERSION}",

"stringIndexType": "Utf16CodeUnit",

"metadata": {

"projectName": "{PROJECT-NAME}",

"projectKind": "CustomEntityRecognition",

"description": "Trying out custom NER",

"language": "{LANGUAGE-CODE}",

"multilingual": true,

"storageInputContainerName": "{CONTAINER-NAME}",

"settings": {}

},

"assets": {

"projectKind": "CustomEntityRecognition",

"entities": [

{

"category": "Entity1"

},

{

"category": "Entity2"

}

],

"documents": [

{

"location": "{DOCUMENT-NAME}",

"language": "{LANGUAGE-CODE}",

"dataset": "{DATASET}",

"entities": [

{

"regionOffset": 0,

"regionLength": 500,

"labels": [

{

"category": "Entity1",

"offset": 25,

"length": 10

},

{

"category": "Entity2",

"offset": 120,

"length": 8

}

]

}

]

},

{

"location": "{DOCUMENT-NAME}",

"language": "{LANGUAGE-CODE}",

"dataset": "{DATASET}",

"entities": [

{

"regionOffset": 0,

"regionLength": 100,

"labels": [

{

"category": "Entity2",

"offset": 20,

"length": 5

}

]

}

]

}

]

}

}

| Key | Placeholder | Value | Example |

|---|---|---|---|

api-version |

{API-VERSION} |

The version of the API you're calling. The version used here must be the same API version in the URL. Learn more about other available API versions | 2022-03-01-preview |

projectName |

{PROJECT-NAME} |

The name of your project. This value is case-sensitive. | myProject |

projectKind |

CustomEntityRecognition |

Your project kind. | CustomEntityRecognition |

language |

{LANGUAGE-CODE} |

A string specifying the language code for the documents used in your project. If your project is a multilingual project, choose the language code of most the documents. | en-us |

multilingual |

true |

A boolean value that enables you to have documents in multiple languages in your dataset and when your model is deployed you can query the model in any supported language (not necessarily included in your training documents. See language support for information on multilingual support. | true |

storageInputContainerName |

{CONTAINER-NAME} | The name of your Azure storage container containing your uploaded documents. | myContainer |

entities |

Array containing all the entity types you have in the project and extracted from your documents. | ||

documents |

Array containing all the documents in your project and list of the entities labeled within each document. | [] | |

location |

{DOCUMENT-NAME} |

The location of the documents in the storage container. | doc1.txt |

dataset |

{DATASET} |

The test set to which this file goes to when split before training. For more information, see How to train a model. Possible values for this field are Train and Test. |

Train |

Once you send your API request, you receive a 202 response indicating that the job was submitted correctly. In the response headers, extract the operation-location value. Here's an example of the format:

{ENDPOINT}/language/authoring/analyze-text/projects/{PROJECT-NAME}/import/jobs/{JOB-ID}?api-version={API-VERSION}

{JOB-ID} is used to identify your request, since this operation is asynchronous. You use this URL to get the import job status.

Possible error scenarios for this request:

- The selected resource doesn't have proper permissions for the storage account.

- The

storageInputContainerNamespecified doesn't exist. - Invalid language code is used, or if the language code type isn't string.

multilingualvalue is a string and not a boolean.

Get import job status

Use the following GET request to get the status of your importing your project. Replace the placeholder values below with your own values.

Request URL

{ENDPOINT}/language/authoring/analyze-text/projects/{PROJECT-NAME}/import/jobs/{JOB-ID}?api-version={API-VERSION}

| Placeholder | Value | Example |

|---|---|---|

{ENDPOINT} |

The endpoint for authenticating your API request. | https://<your-custom-subdomain>.cognitiveservices.azure.com |

{PROJECT-NAME} |

The name of your project. This value is case-sensitive. | myProject |

{JOB-ID} |

The ID for locating your model's training status. This value is in the location header value you received in the previous step. |

xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx |

{API-VERSION} |

The version of the API you are calling. The value referenced here is for the latest version released. See Model lifecycle to learn more about other available API versions. | 2022-05-01 |

Headers

Use the following header to authenticate your request.

| Key | Value |

|---|---|

Ocp-Apim-Subscription-Key |

The key to your resource. Used for authenticating your API requests. |

Train your model

Typically after you create a project, you go ahead and start tagging the documents you have in the container connected to your project. For this quickstart, you imported a sample tagged dataset and initialized your project with the sample JSON tags file.

Start training job

After your project is imported, you can start training your model.

Submit a POST request using the following URL, headers, and JSON body to submit a training job. Replace the placeholder values below with your own values.

{ENDPOINT}/language/authoring/analyze-text/projects/{PROJECT-NAME}/:train?api-version={API-VERSION}

| Placeholder | Value | Example |

|---|---|---|

{ENDPOINT} |

The endpoint for authenticating your API request. | https://<your-custom-subdomain>.cognitiveservices.azure.com |

{PROJECT-NAME} |

The name of your project. This value is case-sensitive. | myProject |

{API-VERSION} |

The version of the API you are calling. The value referenced here is for the latest version released. See Model lifecycle to learn more about other available API versions. | 2022-05-01 |

Headers

Use the following header to authenticate your request.

| Key | Value |

|---|---|

Ocp-Apim-Subscription-Key |

The key to your resource. Used for authenticating your API requests. |

Request body

Use the following JSON in your request body. The model will be given the {MODEL-NAME} once training is complete. Only successful training jobs will produce models.

{

"modelLabel": "{MODEL-NAME}",

"trainingConfigVersion": "{CONFIG-VERSION}",

"evaluationOptions": {

"kind": "percentage",

"trainingSplitPercentage": 80,

"testingSplitPercentage": 20

}

}

| Key | Placeholder | Value | Example |

|---|---|---|---|

| modelLabel | {MODEL-NAME} |

The model name that will be assigned to your model once trained successfully. | myModel |

| trainingConfigVersion | {CONFIG-VERSION} |

This is the model version that will be used to train the model. | 2022-05-01 |

| evaluationOptions | Option to split your data across training and testing sets. | {} |

|

| kind | percentage |

Split methods. Possible values are percentage or manual. See How to train a model for more information. |

percentage |

| trainingSplitPercentage | 80 |

Percentage of your tagged data to be included in the training set. Recommended value is 80. |

80 |

| testingSplitPercentage | 20 |

Percentage of your tagged data to be included in the testing set. Recommended value is 20. |

20 |

Note

The trainingSplitPercentage and testingSplitPercentage are only required if Kind is set to percentage and the sum of both percentages should be equal to 100.

Once you send your API request, you’ll receive a 202 response indicating that the job was submitted correctly. In the response headers, extract the location value. It will be formatted like this:

{ENDPOINT}/language/authoring/analyze-text/projects/{PROJECT-NAME}/train/jobs/{JOB-ID}?api-version={API-VERSION}

{JOB-ID} is used to identify your request, since this operation is asynchronous. You can use this URL to get the training status.

Get training job status

Training could take sometime between 10 and 30 minutes for this sample dataset. You can use the following request to keep polling the status of the training job until successfully completed.

Use the following GET request to get the status of your model's training progress. Replace the placeholder values below with your own values.

Request URL

{ENDPOINT}/language/authoring/analyze-text/projects/{PROJECT-NAME}/train/jobs/{JOB-ID}?api-version={API-VERSION}

| Placeholder | Value | Example |

|---|---|---|

{ENDPOINT} |

The endpoint for authenticating your API request. | https://<your-custom-subdomain>.cognitiveservices.azure.com |

{PROJECT-NAME} |

The name of your project. This value is case-sensitive. | myProject |

{JOB-ID} |

The ID for locating your model's training status. This value is in the location header value you received in the previous step. |

xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx |

{API-VERSION} |

The version of the API you are calling. The value referenced here is for the latest version released. See Model lifecycle to learn more about other available API versions. | 2022-05-01 |

Headers

Use the following header to authenticate your request.

| Key | Value |

|---|---|

Ocp-Apim-Subscription-Key |

The key to your resource. Used for authenticating your API requests. |

Response Body

Once you send the request, you’ll get the following response.

{

"result": {

"modelLabel": "{MODEL-NAME}",

"trainingConfigVersion": "{CONFIG-VERSION}",

"estimatedEndDateTime": "2022-04-18T15:47:58.8190649Z",

"trainingStatus": {

"percentComplete": 3,

"startDateTime": "2022-04-18T15:45:06.8190649Z",

"status": "running"

},

"evaluationStatus": {

"percentComplete": 0,

"status": "notStarted"

}

},

"jobId": "{JOB-ID}",

"createdDateTime": "2022-04-18T15:44:44Z",

"lastUpdatedDateTime": "2022-04-18T15:45:48Z",

"expirationDateTime": "2022-04-25T15:44:44Z",

"status": "running"

}

Deploy your model

Generally after training a model you would review it's evaluation details and make improvements if necessary. In this quickstart, you just deploy your model, and make it available for you to try in Language Studio, or you can call the prediction API.

Start deployment job

Submit a PUT request using the following URL, headers, and JSON body to submit a deployment job. Replace the placeholder values below with your own values.

{Endpoint}/language/authoring/analyze-text/projects/{projectName}/deployments/{deploymentName}?api-version={API-VERSION}

| Placeholder | Value | Example |

|---|---|---|

{ENDPOINT} |

The endpoint for authenticating your API request. | https://<your-custom-subdomain>.cognitiveservices.azure.com |

{PROJECT-NAME} |

The name of your project. This value is case-sensitive. | myProject |

{DEPLOYMENT-NAME} |

The name of your deployment. This value is case-sensitive. | staging |

{API-VERSION} |

The version of the API you are calling. The value referenced here is for the latest version released. See Model lifecycle to learn more about other available API versions. | 2022-05-01 |

Headers

Use the following header to authenticate your request.

| Key | Value |

|---|---|

Ocp-Apim-Subscription-Key |

The key to your resource. Used for authenticating your API requests. |

Request body

Use the following JSON in the body of your request. Use the name of the model you to assign to the deployment.

{

"trainedModelLabel": "{MODEL-NAME}"

}

| Key | Placeholder | Value | Example |

|---|---|---|---|

| trainedModelLabel | {MODEL-NAME} |

The model name that will be assigned to your deployment. You can only assign successfully trained models. This value is case-sensitive. | myModel |

Once you send your API request, you’ll receive a 202 response indicating that the job was submitted correctly. In the response headers, extract the operation-location value. It will be formatted like this:

{ENDPOINT}/language/authoring/analyze-text/projects/{PROJECT-NAME}/deployments/{DEPLOYMENT-NAME}/jobs/{JOB-ID}?api-version={API-VERSION}

{JOB-ID} is used to identify your request, since this operation is asynchronous. You can use this URL to get the deployment status.

Get deployment job status

Use the following GET request to query the status of the deployment job. You can use the URL you received from the previous step, or replace the placeholder values below with your own values.

{ENDPOINT}/language/authoring/analyze-text/projects/{PROJECT-NAME}/deployments/{DEPLOYMENT-NAME}/jobs/{JOB-ID}?api-version={API-VERSION}

| Placeholder | Value | Example |

|---|---|---|

{ENDPOINT} |

The endpoint for authenticating your API request. | https://<your-custom-subdomain>.cognitiveservices.azure.com |

{PROJECT-NAME} |

The name of your project. This value is case-sensitive. | myProject |

{DEPLOYMENT-NAME} |

The name of your deployment. This value is case-sensitive. | staging |

{JOB-ID} |

The ID for locating your model's training status. This is in the location header value you received in the previous step. |

xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxx |

{API-VERSION} |

The version of the API you are calling. The value referenced here is for the latest version released. See Model lifecycle to learn more about other available API versions. | 2022-05-01 |

Headers

Use the following header to authenticate your request.

| Key | Value |

|---|---|

Ocp-Apim-Subscription-Key |

The key to your resource. Used for authenticating your API requests. |

Response Body

Once you send the request, you will get the following response. Keep polling this endpoint until the status parameter changes to "succeeded". You should get a 200 code to indicate the success of the request.

{

"jobId":"{JOB-ID}",

"createdDateTime":"{CREATED-TIME}",

"lastUpdatedDateTime":"{UPDATED-TIME}",

"expirationDateTime":"{EXPIRATION-TIME}",

"status":"running"

}

Extract custom entities

After your model is deployed, you can start using it to extract entities from your text using the prediction API. In the sample dataset, downloaded earlier, you can find some test documents that you can use in this step.

Submit a custom NER task

Use this POST request to start a text classification task.

{ENDPOINT}/language/analyze-text/jobs?api-version={API-VERSION}

| Placeholder | Value | Example |

|---|---|---|

{ENDPOINT} |

The endpoint for authenticating your API request. | https://<your-custom-subdomain>.cognitiveservices.azure.com |

{API-VERSION} |

The version of the API you are calling. The value referenced here is for the latest version released. See Model lifecycle to learn more about other available API versions. | 2022-05-01 |

Headers

| Key | Value |

|---|---|

| Ocp-Apim-Subscription-Key | Your key that provides access to this API. |

Body

{

"displayName": "Extracting entities",

"analysisInput": {

"documents": [

{

"id": "1",

"language": "{LANGUAGE-CODE}",

"text": "Text1"

},

{

"id": "2",

"language": "{LANGUAGE-CODE}",

"text": "Text2"

}

]

},

"tasks": [

{

"kind": "CustomEntityRecognition",

"taskName": "Entity Recognition",

"parameters": {

"projectName": "{PROJECT-NAME}",

"deploymentName": "{DEPLOYMENT-NAME}"

}

}

]

}

| Key | Placeholder | Value | Example |

|---|---|---|---|

displayName |

{JOB-NAME} |

Your job name. | MyJobName |

documents |

[{},{}] | List of documents to run tasks on. | [{},{}] |

id |

{DOC-ID} |

Document name or ID. | doc1 |

language |

{LANGUAGE-CODE} |

A string specifying the language code for the document. If this key isn't specified, the service will assume the default language of the project that was selected during project creation. See language support for a list of supported language codes. | en-us |

text |

{DOC-TEXT} |

Document task to run the tasks on. | Lorem ipsum dolor sit amet |

tasks |

List of tasks we want to perform. | [] |

|

taskName |

CustomEntityRecognition |

The task name | CustomEntityRecognition |

parameters |

List of parameters to pass to the task. | ||

project-name |

{PROJECT-NAME} |

The name for your project. This value is case-sensitive. | myProject |

deployment-name |

{DEPLOYMENT-NAME} |

The name of your deployment. This value is case-sensitive. | prod |

Response

You will receive a 202 response indicating that your task has been submitted successfully. In the response headers, extract operation-location.

operation-location is formatted like this:

{ENDPOINT}/language/analyze-text/jobs/{JOB-ID}?api-version={API-VERSION}

You can use this URL to query the task completion status and get the results when task is completed.

Get task results

Use the following GET request to query the status/results of the custom entity recognition task.

{ENDPOINT}/language/analyze-text/jobs/{JOB-ID}?api-version={API-VERSION}

| Placeholder | Value | Example |

|---|---|---|

{ENDPOINT} |

The endpoint for authenticating your API request. | https://<your-custom-subdomain>.cognitiveservices.azure.com |

{API-VERSION} |

The version of the API you are calling. The value referenced here is for the latest version released. See Model lifecycle to learn more about other available API versions. | 2022-05-01 |

Headers

| Key | Value |

|---|---|

| Ocp-Apim-Subscription-Key | Your key that provides access to this API. |

Response Body

The response will be a JSON document with the following parameters

{

"createdDateTime": "2021-05-19T14:32:25.578Z",

"displayName": "MyJobName",

"expirationDateTime": "2021-05-19T14:32:25.578Z",

"jobId": "xxxx-xxxx-xxxxx-xxxxx",

"lastUpdateDateTime": "2021-05-19T14:32:25.578Z",

"status": "succeeded",

"tasks": {

"completed": 1,

"failed": 0,

"inProgress": 0,

"total": 1,

"items": [

{

"kind": "EntityRecognitionLROResults",

"taskName": "Recognize Entities",

"lastUpdateDateTime": "2020-10-01T15:01:03Z",

"status": "succeeded",

"results": {

"documents": [

{

"entities": [

{

"category": "Event",

"confidenceScore": 0.61,

"length": 4,

"offset": 18,

"text": "trip"

},

{

"category": "Location",

"confidenceScore": 0.82,

"length": 7,

"offset": 26,

"subcategory": "GPE",

"text": "Seattle"

},

{

"category": "DateTime",

"confidenceScore": 0.8,

"length": 9,

"offset": 34,

"subcategory": "DateRange",

"text": "last week"

}

],

"id": "1",

"warnings": []

}

],

"errors": [],

"modelVersion": "2020-04-01"

}

}

]

}

}

Clean up resources

When you no longer need your project, you can delete it with the following DELETE request. Replace the placeholder values with your own values.

{Endpoint}/language/authoring/analyze-text/projects/{projectName}?api-version={API-VERSION}

| Placeholder | Value | Example |

|---|---|---|

{ENDPOINT} |

The endpoint for authenticating your API request. | https://<your-custom-subdomain>.cognitiveservices.azure.com |

{PROJECT-NAME} |

The name for your project. This value is case-sensitive. | myProject |

{API-VERSION} |

The version of the API you are calling. The value referenced here is for the latest version released. See Model lifecycle to learn more about other available API versions. | 2022-05-01 |

Headers

Use the following header to authenticate your request.

| Key | Value |

|---|---|

| Ocp-Apim-Subscription-Key | The key to your resource. Used for authenticating your API requests. |

Once you send your API request, you will receive a 202 response indicating success, which means your project has been deleted. A successful call results with an Operation-Location header used to check the status of the job.

Related content

After you create your entity extraction model, you can use the runtime API to extract entities.

As you create your own custom NER projects, use our how-to articles to learn more about tagging, training, and consuming your model in greater detail: