Error in Synapse when querying Dataverse data in a Spark notebook

Hey there,

I am having an issue querying the data from the Synapse Link for Dataverse in a Spark Notebook within Synapse.

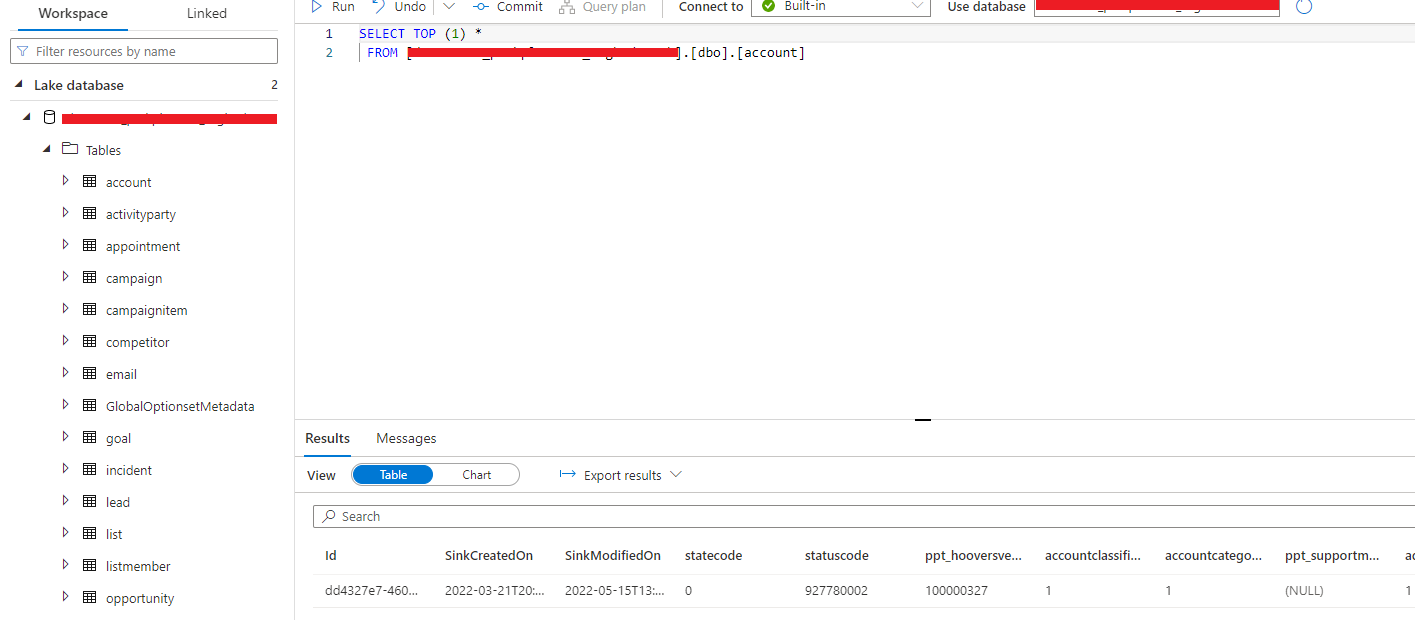

I am able to run a SQL query against the data (which appears in Synapse as a Lake Database), and it returns data. See below:

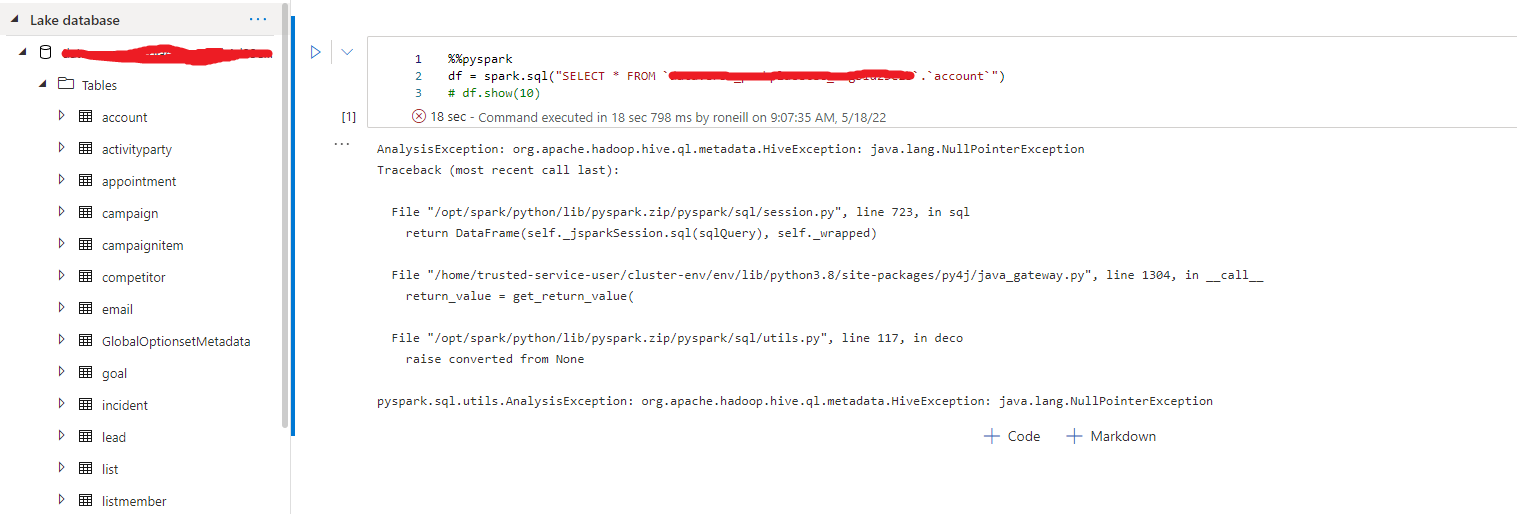

However, when I run a query in a Spark notebook, I get the following error:

    AnalysisException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.NullPointerException

    Traceback (most recent call last):
      File "/opt/spark/python/lib/pyspark.zip/pyspark/sql/session.py", line 723, in sql
        return DataFrame(self._jsparkSession.sql(sqlQuery), self._wrapped)
      File "/home/trusted-service-user/cluster-env/env/lib/python3.8/site-packages/py4j/java_gateway.py", line 1304, in __call__
        return_value = get_return_value(
      File "/opt/spark/python/lib/pyspark.zip/pyspark/sql/utils.py", line 117, in deco
        raise converted from None
    pyspark.sql.utils.AnalysisException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.NullPointerException

See screenshot:

The Synapse workspace has Owner and Storage Blob Data Contributor access on the storage account.

Anyone have any ideas? I'm really stuck with this one.
